AI is on everyone’s mind. And it always leads to a follow-up question: how secure is this new-fangled AI tool?

If you’re interested in implementing a chatbot for a company, then chatbot security should be top of mind.

In this article, I’ll walk you through all the key chatbot security risks, as well as the precautions your organization needs to take when implementing an AI chatbot or AI agent.

Are chatbots secure?

Not all chatbots are secure, and you can’t always know what safety precautions went into a given chatbot’s development. However, it is possible to build and deploy a secure chatbot with the right tools.

Chatbot security is a wide-ranging topic: there are countless ways to build chatbots and AI agents, and endless use cases. Each of these differences brings its own security considerations.

What can I do to avoid chatbot risks?

Security is an important part of your chatbot project – your safety strategy cannot be half-baked.

If you’re out of your depth, enlist an expert. Most organizations building and deploying a chatbot are guided by AI partners: organizations with expertise in a specific type of AI project. If you’re building a chatbot, you can check out our list of certified experts, a list of freelancers and agencies that are well-versed in building, deploying, and monitoring secure chatbots.

Otherwise, educate yourself on the risks relevant to your chatbot and take all necessary steps to prevent unwanted outcomes.

I’ve made this a bit easier for you by compiling the most common security risks of chatbots and providing information on how to combat each risk.

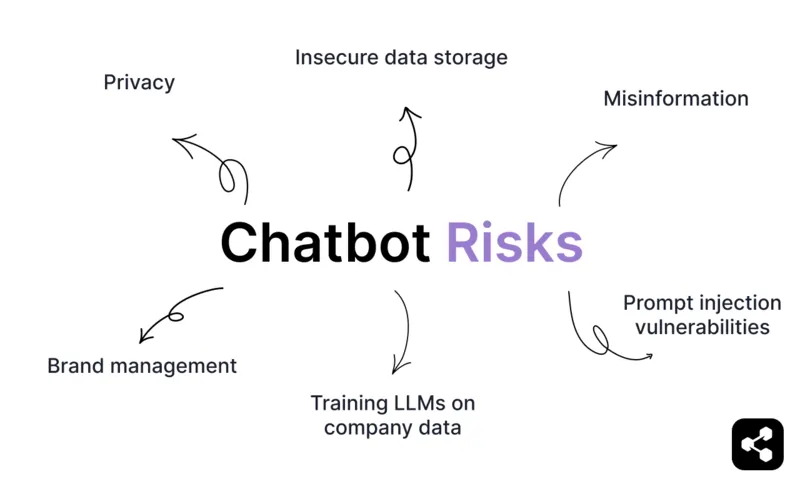

Chatbot Risks

Privacy & Confidential Information

Chatbots often handle personal data, such as names, email addresses, or financial details. That makes them a privacy risk whenever they handle sensitive user data without robust safeguards.

This is especially important for medical chatbots, chatbots that process payments of any kind, finance and banking chatbots, and any enterprise chatbot handling sensitive data.

If this data is stored insecurely or transmitted without encryption, it becomes vulnerable to breaches, exposing businesses to significant legal, financial, and reputational risks.
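One common safeguard is to redact obvious personal data before conversation transcripts are logged or stored. Below is a minimal Python sketch, assuming every transcript passes through a single function before storage. The `redact` function and its two regex patterns are illustrative assumptions, not a complete PII detector; they will miss names, addresses, and many other kinds of sensitive data.

```python
import re

# Illustrative patterns only (assumption): real PII detection needs much
# broader coverage and is usually handled by a dedicated tool or service.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13 to 19 digit card-like numbers, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def redact(text: str) -> str:
    """Mask email addresses and card-like numbers before a transcript is stored."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com")` returns `"Contact [EMAIL]"`, while short numbers such as order IDs are left alone.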

Misinformation and Hallucinations

Chatbots powered by LLMs – if improperly built – are at risk of spreading misinformation.

Take the infamous Air Canada chatbot fiasco. After his grandmother died, a passenger was told by the chatbot on the company’s website that he could book his flight and apply for a bereavement fare retroactively.

When he later requested the reimbursement, he was told the bereavement policy did not apply to completed travel. The company admitted the chatbot had used ‘misleading words’, and the case went before a Canadian tribunal, which ruled in the passenger’s favor.

These kinds of hallucinations don’t just embarrass brands – they cost them.

But it’s possible to build chatbots that stay on-topic and on-brand. One of our clients, a health coaching platform, reduced manual support tickets by 65% with a chatbot. Across 100,000 conversations, the company found zero hallucinations.

How? Retrieval-augmented generation (RAG) plays a big role in most enterprise chatbots. Instead of generating freeform responses from the model’s training data alone, a RAG chatbot first retrieves relevant passages from a knowledge base of verified, up-to-date information, then generates its answer from those passages. This grounds responses in your own sources rather than in assumptions or guesswork.

Enterprise chatbots include other security guardrails that are necessary before public deployment – we’ll get to them below.

Insecure Data Storage

If your chatbot is storing data in servers or a cloud environment, inadequate security protocols can leave it exposed to breaches.

Outdated software, misconfigured servers, or unencrypted data can be exploited by attackers to gain access to sensitive user information.

For instance, some chatbots store data backups without proper encryption, making them vulnerable to interception during transfer or unauthorized access.

Prompt Injection Vulnerabilities & Malicious Output

A poorly protected chatbot can be vulnerable to prompt injection: messages crafted to override its instructions and push it into unintended behavior.

For example, if your chatbot is helping sell your dealership’s vehicles, you don’t want it agreeing to sell an SUV for $1. (See the infamous Chevy Tahoe incident.)

Chatbots can produce harmful or nonsensical responses if their outputs aren’t properly controlled. These errors can stem from inadequate guardrails, lack of validation checks, or manipulation by users.

However, this is one of the easier security risks to avoid. Strong chatbots will use conversation guardrails to prevent off-topic or off-brand conversations before they take place.
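As a rough illustration of guardrails on both sides of the model, the Python sketch below screens incoming messages for obvious injection phrases and off-topic requests, and blocks replies that quote a price. The topic list, patterns, and fallback message are hypothetical. Keyword rules like these are easy to evade, so treat this as a picture of where checks sit in the pipeline rather than a sufficient defense on its own.

```python
import re

# Hypothetical rules for a dealership sales bot (assumption). Production
# guardrails typically combine classifiers, system-prompt constraints,
# and business-rule checks rather than keyword lists alone.
ALLOWED_TOPICS = {
    "vehicle", "vehicles", "car", "cars", "suv", "truck",
    "test", "drive", "financing", "trade", "inventory",
}
INJECTION_PATTERNS = [
    re.compile(r"ignore (?:all |any )?(?:previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"legally binding", re.IGNORECASE),
]
# Any dollar amount in a reply counts as a price commitment the bot shouldn't make.
PRICE_RE = re.compile(r"\$\s?\d[\d,]*(?:\.\d{2})?")

FALLBACK = "I can help with questions about our vehicles. For pricing, a sales rep will follow up."


def check_input(message: str) -> bool:
    """Return True if the message looks on-topic and has no obvious injection phrase."""
    if any(p.search(message) for p in INJECTION_PATTERNS):
        return False
    words = set(re.findall(r"[a-z]+", message.lower()))
    return bool(words & ALLOWED_TOPICS)


def check_output(reply: str) -> str:
    """Replace any reply that quotes a price with a safe fallback."""
    return FALLBACK if PRICE_RE.search(reply) else reply
```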

Training LLMs on Company Data

Training chatbots on company data can pose privacy and security risks, especially with general-purpose platforms like ChatGPT. Whenever company information is shared with a general-purpose chatbot, there’s a risk of that data leaking.

Custom chatbots, on the other hand, make it much easier to protect your data. Enterprise-grade chatbot platforms are typically designed with data isolation and security in mind. These chatbots are trained within controlled environments, significantly reducing the risk of data leakage.

Brand Management

The biggest public chatbot fiascos have centered on brand management. How well does your chatbot represent your brand? This is at the heart of chatbot security.

Chatbots are often the first touchpoint customers have with your business, and if their responses are inaccurate, inappropriate, or off-tone, they can harm your brand's reputation.

Again, this is a risk that can be avoided with conversation guardrails and conversation design.

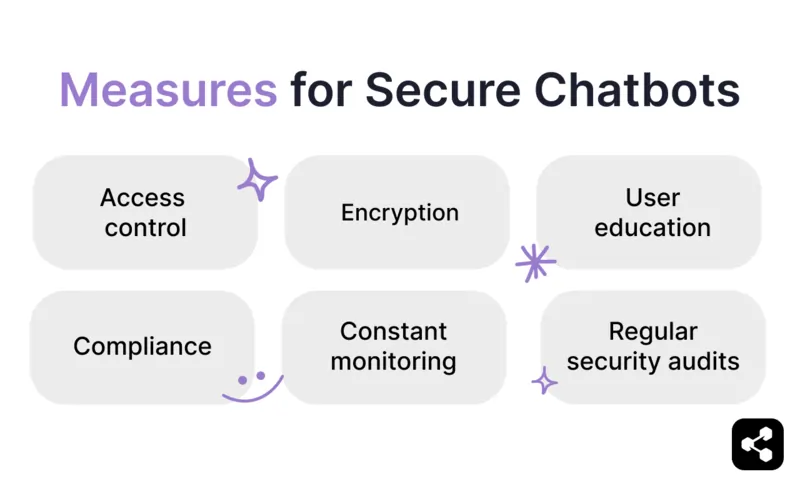

Necessary Safety Measures for Secure Chatbots

Access Control & Secure User Access

If the tool will be used by many people, you usually won’t want everyone to have the same level of access.

Authentication verifies who the user is — ensuring that only legitimate users can log in. Once authenticated, authorization determines what each user is allowed to do, based on their role or permissions.

A key part of this is role-based access control (RBAC), which ensures users only access the information and features they need to perform their roles. This means:

- Limiting sensitive data access to authorized personnel.

- Restricting chatbot editing capabilities to admins.

- Defining user roles with clear, enforceable permissions.

By implementing RBAC alongside secure authentication and authorization protocols, you can minimize risks like unauthorized access, data leaks, and accidental misuse. It’s a simple yet essential guardrail for secure chatbot deployment.
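Here’s a minimal Python sketch of RBAC with deny-by-default checks. It assumes the user has already been authenticated and that their role comes from a trusted session, never from anything the user types into the chat. The roles, permissions, and `edit_bot_config` function are hypothetical.

```python
from enum import Enum


class Permission(Enum):
    CHAT = "chat"
    VIEW_TRANSCRIPTS = "view_transcripts"
    EDIT_BOT = "edit_bot"


# Hypothetical role-to-permission mapping; define roles to match your organization.
ROLE_PERMISSIONS = {
    "end_user": {Permission.CHAT},
    "support_agent": {Permission.CHAT, Permission.VIEW_TRANSCRIPTS},
    "admin": {Permission.CHAT, Permission.VIEW_TRANSCRIPTS, Permission.EDIT_BOT},
}


def is_authorized(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles get an empty permission set."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def edit_bot_config(role: str, new_prompt: str) -> str:
    """Only roles holding EDIT_BOT may change the chatbot's configuration."""
    if not is_authorized(role, Permission.EDIT_BOT):
        raise PermissionError(f"role '{role}' may not edit the chatbot")
    return f"system prompt updated: {new_prompt!r}"
```

Keeping the mapping in one place makes the permission model easy to review during an audit.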

Regular Security Audits

Like any software that handles sensitive data, chatbot software should undergo regular security audits.

Regular security audits are comprehensive reviews of your chatbot's architecture, configurations, and processes to ensure they meet security standards and industry best practices.

These audits typically involve testing for vulnerabilities – such as weak authentication protocols, misconfigured servers, or exposed APIs – as well as evaluating the effectiveness of existing security measures like encryption and access controls.

Audits also assess compliance with data protection regulations such as GDPR and with security frameworks such as SOC 2.

This process often includes:

- Penetration testing to simulate potential attacks

- Code reviews to spot hidden flaws

- Monitoring for unusual activity

Security audits are a proactive measure to evaluate your chatbot's resilience against threats and verify its ability to handle sensitive information securely.

Encryption

Encryption is the process of converting data into a secure format to prevent unauthorized access. For sensitive data, this includes two primary types: data-at-rest encryption, which protects stored information, and data-in-transit encryption, which secures data while it's being transmitted.

Using strong, well-vetted encryption, such as AES (Advanced Encryption Standard) for stored data and TLS for data in transit, ensures that even if data is intercepted or stolen, it remains unreadable without the keys.
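For the data-in-transit half, a minimal Python sketch using only the standard library might refuse plain-HTTP endpoints and require certificate verification with TLS 1.2 or newer. The function names are illustrative, and the TLS 1.2 floor is an assumption you should align with your own security policy.

```python
import ssl
from urllib.parse import urlparse


def require_https(url: str) -> str:
    """Refuse to send chatbot data to a plain-HTTP (unencrypted) endpoint."""
    if urlparse(url).scheme != "https":
        raise ValueError(f"refusing unencrypted endpoint: {url}")
    return url


def make_tls_context() -> ssl.SSLContext:
    """TLS client context that verifies server certificates and refuses TLS < 1.2."""
    ctx = ssl.create_default_context()  # system CA bundle, hostname checking enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

You could then pass the context to a client call, for example `urllib.request.urlopen(require_https(url), context=make_tls_context())`. For data at rest, rely on a vetted library (such as the `cryptography` package’s AES-GCM support) or your database’s built-in encryption rather than implementing AES yourself.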

For chatbots handling sensitive information, encryption is a non-negotiable guardrail to safeguard user privacy and maintain compliance with security standards.

Constant Monitoring

Our Platform-as-a-Service recommends three stages of chatbot implementation: building, deploying, and monitoring.

Customers tend to forget the final stage when building out their initial plans, but monitoring is the most important step of all.

This includes:

- Tracking performance metrics

- Identifying vulnerabilities

- Addressing issues like hallucinations or data leaks

Regular updates and testing help ensure your chatbot adapts to evolving threats and stays compliant with industry regulations.

Without proper monitoring, even the most well-built chatbot can become a liability over time.
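One simple monitoring signal is the share of recent replies that were flagged, whether by a guardrail, a grounding check, or a user report. This Python sketch tracks that rate over a sliding window; the `ConversationMonitor` class, window size, and alert threshold are hypothetical values to tune for your own traffic.

```python
from collections import deque


class ConversationMonitor:
    """Track the share of flagged replies over the last `window` conversations."""

    def __init__(self, window: int = 100, alert_rate: float = 0.05):
        # deque with maxlen drops the oldest entry once the window is full
        self.recent: deque[bool] = deque(maxlen=window)
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> None:
        self.recent.append(flagged)

    def flagged_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def should_alert(self) -> bool:
        """True when the flagged share exceeds the configured threshold."""
        return self.flagged_rate() > self.alert_rate
```

A sudden rise in this rate is a cue to review recent transcripts, since it can point to a new attack pattern, a stale knowledge base, or a regression after an update.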

Compliance

If your chatbot will be handling sensitive data, you need to choose a platform that complies with the relevant frameworks.

The most common and relevant compliance frameworks include:

- GDPR: General Data Protection Regulation

- CCPA: California Consumer Privacy Act

- HIPAA: Health Insurance Portability and Accountability Act

- SOC 2: System and Organization Controls 2

If you’ll be processing data from individuals in the EU, you’ll need to have a GDPR-compliant chatbot.

Full compliance requires both (a) a platform that follows proper compliance measures and (b) some work from your chatbot builders (such as how you handle data once your chatbot receives it).
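As an example of the builder-side work, here’s a minimal Python sketch of two common data-handling duties: deleting a user’s transcripts on request (GDPR’s right to erasure) and purging logs older than a retention period. The in-memory `TranscriptStore` and the 30-day default are assumptions for illustration; a real system would also need to cover backups, analytics copies, and any third-party processors.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Transcript:
    user_id: str
    text: str
    created_at: datetime


class TranscriptStore:
    """In-memory stand-in (hypothetical) for wherever your chatbot keeps conversation logs."""

    def __init__(self, retention: timedelta = timedelta(days=30)):
        self.retention = retention  # assumed policy; set it per your legal basis
        self.items: list[Transcript] = []

    def add(self, t: Transcript) -> None:
        self.items.append(t)

    def erase_user(self, user_id: str) -> int:
        """Handle an erasure request: delete every transcript for this user."""
        before = len(self.items)
        self.items = [t for t in self.items if t.user_id != user_id]
        return before - len(self.items)

    def purge_expired(self, now: datetime) -> int:
        """Drop transcripts older than the retention period; return how many were removed."""
        before = len(self.items)
        self.items = [t for t in self.items if now - t.created_at <= self.retention]
        return before - len(self.items)
```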

User Education

Sometimes it’s not the fault of the technology – it’s a lack of understanding from its users.

An important part of instituting chatbot technology is properly preparing your employees for new risks and challenges (along with the myriad of benefits).

Educate your employees on how to incorporate the chatbot into their work without risking your company’s reputation. Ideally, your chatbot will be built with enough guardrails that misusing it is near-impossible.

Deploy the Safest Chatbot on the Market

Security should be top-of-mind for your company’s chatbot investment.

Botpress is an AI agent and chatbot platform used by 35% of Fortune 500 companies. Our state-of-the-art security suite allows for maximum control over your AI tools.

Our private LLM gateway, privacy shield, built-in safety agent, and brand protection framework ensure that our clients experience the most secure chatbot experience on the market.

Start building today. It’s free.

Or book a call with our team to get started.

FAQs

1. How do chatbots store data?

Well-built chatbots store data in secure databases, typically cloud-based, using encryption both at rest and in transit. The stored data may include conversation logs and metadata, and is often structured to support personalization or regulatory compliance.

2. How can I ensure a secure chatbot?

For a secure chatbot, implement HTTPS for all communications, encrypt stored data, authenticate API calls, and restrict access using role-based permissions. You should also regularly audit the system and comply with data privacy laws such as GDPR or HIPAA.
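One common way to authenticate API calls, such as webhooks sent to your chatbot’s backend, is an HMAC signature over the request body. This Python sketch uses only the standard library; the shared secret is a placeholder, and how the signature is transmitted (for example, in a request header) is an assumption that depends on your platform.

```python
import hashlib
import hmac

# Placeholder secret (assumption): load it from a secrets manager, never from source code.
SECRET = b"replace-with-a-random-secret"


def sign(body: bytes, secret: bytes = SECRET) -> str:
    """Compute the hex HMAC-SHA256 signature a trusted caller would attach to a request."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify(body: bytes, signature: str, secret: bytes = SECRET) -> bool:
    """Reject requests whose signature doesn't match, comparing in constant time."""
    expected = sign(body, secret)
    return hmac.compare_digest(expected, signature)
```

Using `hmac.compare_digest` instead of `==` avoids leaking timing information about how much of the signature matched. Signing authenticates the caller but does not hide the payload, so it complements HTTPS rather than replacing it.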

3. What is chatbot safety?

Chatbot safety is the set of practices that protect users and systems from risks such as data breaches, misinformation, social engineering attacks, or abusive interactions. It includes enforcing content filters, securing data channels, validating inputs, and enabling human fallback where needed.

4. What is the most secure chatbot?

The most secure chatbot is one deployed with end-to-end encryption, strict access controls, on-premise or private cloud options, and compliance with certifications like SOC 2, ISO 27001, or HIPAA.
