- Released on August 7, 2025, GPT-5 unifies advanced reasoning, multimodal input, and task execution into a single system, eliminating the need to switch between specialized models.

- GPT-5 is designed for complex, multi-step workflows.

- GPT-5 significantly reduces hallucinations compared to earlier versions.

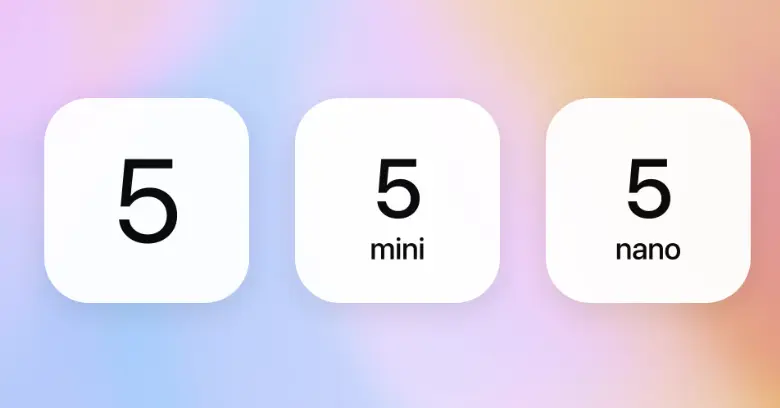

- The variants of GPT-5 include gpt-5, gpt-5-mini, gpt-5-nano, and gpt-5-chat.

Over the past year, OpenAI introduced GPT-4o, o1, and o3, each improving how AI thinks, reasons, and interacts.

These models made AI responses faster, more accurate, and more intuitive. But each was just a step toward something bigger.

On August 6th, OpenAI announced — not-so-subtly — the imminent launch of GPT-5.

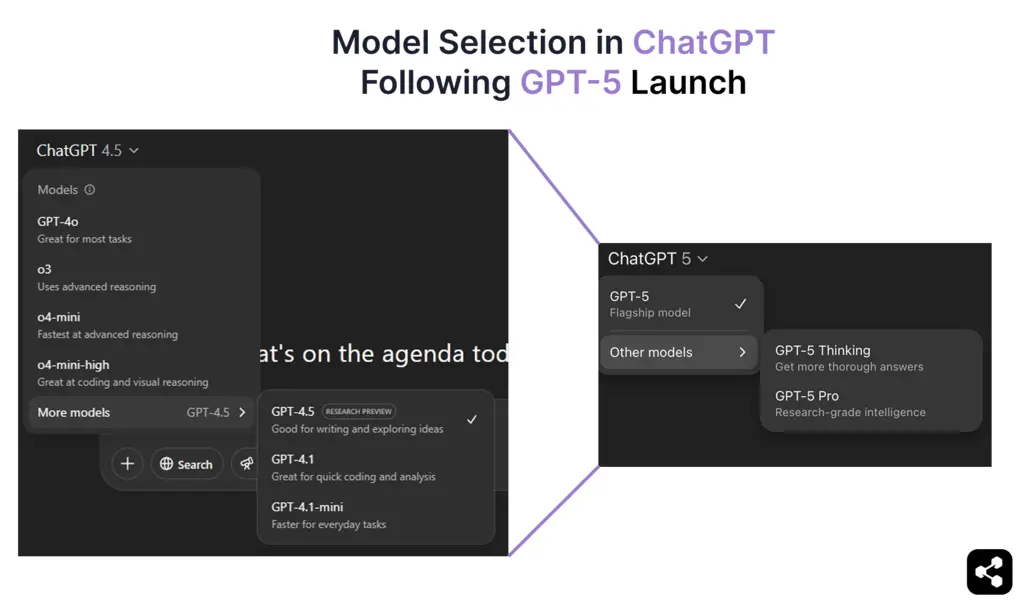

And now, as of August 7, 2025, GPT-5 has officially arrived, bringing together OpenAI’s most advanced reasoning and multimodal capabilities in one unified model. GPT-5 is now the default model in ChatGPT for all free and paid users, replacing GPT-4o entirely.

In this guide, I’ll break down everything confirmed about GPT-5: its capabilities, performance upgrades, training process, release timeline, and cost.

What is GPT-5?

GPT-5 is OpenAI’s latest-generation large language model, officially released on August 7, 2025. It builds on the GPT architecture while integrating advancements from reasoning-first models like o1 and o3.

Before GPT-5, OpenAI rolled out GPT-4.5 (Orion) inside ChatGPT — a transitional model that sharpened reasoning accuracy, reduced hallucinations, and laid the groundwork for the deeper chain-of-thought execution now native to GPT-5.

Many of the capabilities hinted at in the past, such as stepwise logic, better context retention, and smoother multimodal switching, are now fully realized and unified in GPT-5.

GPT-5 runs as part of a unified adaptive system. A new real-time router automatically chooses between a fast, high-throughput model for routine queries and a “thinking” model for complex reasoning, eliminating the need to manually switch between specialized models.
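OpenAI has not published how the router makes its decision, so the following is purely illustrative: a hypothetical keyword-and-length heuristic that sends each prompt to either a fast path or a "thinking" path. The function name, hint words, and length threshold are assumptions for the sake of the example, not OpenAI's actual routing logic.

```python
import re
from dataclasses import dataclass

# Hypothetical hint words suggesting a prompt needs multi-step reasoning.
# Both the list and the length threshold are illustrative assumptions.
REASONING_HINTS = ("prove", "debug", "step by step", "analyze", "compare")
LONG_PROMPT_CHARS = 2_000


@dataclass
class Route:
    path: str    # "fast" or "thinking"
    reason: str  # short explanation of the decision


def route_prompt(prompt: str) -> Route:
    """Toy router: pick a fast or a 'thinking' path for a single prompt."""
    text = prompt.lower()
    for hint in REASONING_HINTS:
        # Word boundaries avoid false matches such as "prove" inside "improve".
        if re.search(rf"\b{re.escape(hint)}\b", text):
            return Route("thinking", f"matched hint {hint!r}")
    if len(prompt) > LONG_PROMPT_CHARS:
        return Route("thinking", "long prompt")
    return Route("fast", "no reasoning signals")


if __name__ == "__main__":
    for p in ("What's the capital of France?", "Debug this stack trace for me"):
        r = route_prompt(p)
        print(f"{p!r} -> {r.path} ({r.reason})")
```

A real router would presumably rely on learned signals rather than keyword lists; the point of the sketch is only that routing is a cheap decision made before any expensive reasoning happens.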

What are the different GPT-5 models?

GPT-5 is a family of specialized variants optimized for different use cases, ranging from everyday use in ChatGPT to large-scale deployments via the API.

Each GPT-5 variant runs on the same unified architecture but is tuned for a specific balance of knowledge cut-off, reasoning depth, speed, and operational scale.

These variants unify OpenAI’s reasoning-first direction with targeted performance tuning, giving developers the flexibility to match model choice to workload complexity and deployment scale.

How does GPT-5 perform?

With GPT-5 officially released on August 7, 2025, we’re now seeing how its architecture handles real-world use across reasoning, multimodality, and agent-style task execution.

Sam Altman had previously hinted that GPT-5 would move beyond just being “a better chatbot” — and based on early usage, that’s exactly what it delivers.

Reasoning that adapts in real time

A built-in routing system decides when to answer instantly and when to think in steps. For complex queries, GPT-5 moves into a chain-of-thought process with embedded prompt-chaining, mapping out intermediate steps before giving a final answer.

This makes GPT chatbots built on GPT-5 better at sustained problem-solving — from multi-stage code debugging to layered business analysis — without requiring separate models or mode switching.
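ChatGPT's built-in router makes this choice for you; through the API you steer it yourself on each request. A minimal sketch with the official `openai` Python package, assuming the Responses API's `reasoning={"effort": ...}` parameter and the `minimal`/`low`/`medium`/`high` levels documented for GPT-5 at launch; the helper names and prompts are hypothetical. The request builder is kept separate so it can be inspected without making a network call.

```python
import os

# Reasoning effort levels OpenAI documented for GPT-5 at launch (verify in current docs).
EFFORT_LEVELS = ("minimal", "low", "medium", "high")


def build_request(prompt: str, effort: str = "medium", model: str = "gpt-5") -> dict:
    """Build keyword arguments for client.responses.create()."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}, got {effort!r}")
    return {
        "model": model,
        "input": prompt,
        # More effort means more internal reasoning tokens (slower, billed as output).
        "reasoning": {"effort": effort},
    }


def ask(prompt: str, effort: str = "medium") -> str:
    # Lazy import so build_request() can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(**build_request(prompt, effort))
    return response.output_text


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    # A debugging task gets more reasoning; a simple lookup gets almost none.
    print(ask("Find the bug: def mean(xs): return sum(xs) / len(xs) - 1", effort="high"))
    print(ask("What is the capital of Japan?", effort="minimal"))
```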

Context handling at scale

In ChatGPT, the model's context window is around 256,000 tokens; through the API, it expands to 400,000. This enables work across entire books, multi-hour meeting transcripts, or large repositories without losing track of earlier details.
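A quick way to check whether a document will fit is to estimate its token count. The sketch below uses the common rule of thumb of roughly 4 characters per token for English text (an approximation; a real tokenizer will give different counts) and reserves part of the window for the model's reply. The 32,000-token reserve is an arbitrary assumption you should tune; the window sizes are the figures quoted above.

```python
import math

# Context window sizes quoted in this article, in tokens.
CONTEXT_WINDOWS = {"chatgpt": 256_000, "api": 400_000}
CHARS_PER_TOKEN = 4  # rough heuristic for English text, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, surface: str = "api", reserve_for_output: int = 32_000) -> bool:
    """True if the estimated prompt plus the reserved reply budget fits the window."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_WINDOWS[surface]


if __name__ == "__main__":
    doc = "a" * 1_000_000  # stand-in for about one million characters of text
    print(estimate_tokens(doc), fits_in_context(doc), fits_in_context(doc, "chatgpt"))
    # 250000 True False
```

The same document fits through the API but not in ChatGPT once room for the answer is set aside, which is why very long inputs are usually better handled through the API.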

Across long sessions, ChatGPT's responses are noticeably more accurate, with fewer contradictions and stronger retention of earlier context.

Better language support for global markets

GPT-5's unified architecture brings a major leap in multilingual and voice capabilities. ChatGPT can now handle a wider range of languages, with higher translation accuracy and fewer context drops across extended conversations.

These gains extend to voice interactions. Responses sound more natural across accents and speech patterns, making multilingual GPT chatbots just as fluid in spoken Spanish, Hindi, Japanese, or Arabic as they are in text.

From Chatbot to AI Agent

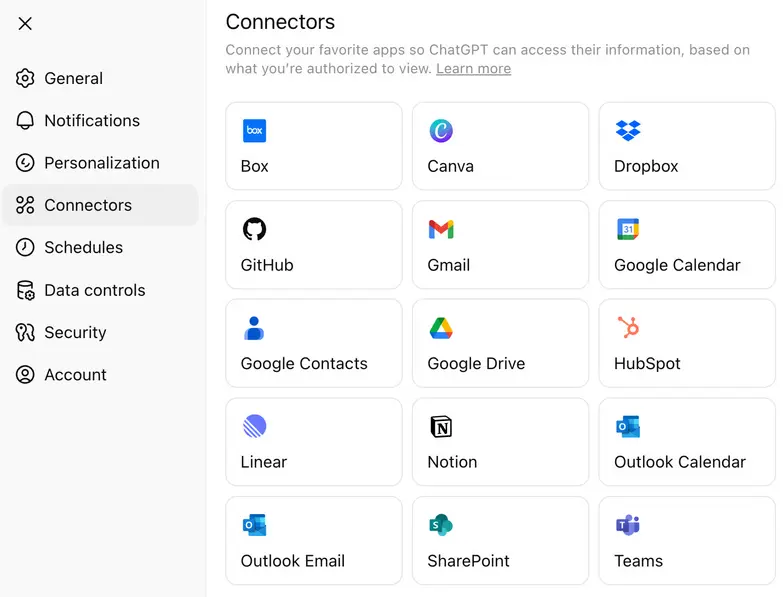

With GPT-5, OpenAI has expanded its approach to application connectors — native integrations that let the model interact directly with external tools, CRMs, databases, and productivity suites.

By routing tasks to lightweight connectors instead of pushing every step through high-cost reasoning calls, teams can reduce API spend while still keeping complex logic available when it is needed.
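ChatGPT's connectors are configured inside the product, but in the API the closest analogue is function calling: you describe a tool, the model decides when to call it, and your own code runs it. Below is a minimal sketch of the local half of that loop, assuming the Responses API's flat function-tool format; `lookup_customer` and its in-memory data are hypothetical stand-ins for a real CRM integration.

```python
import json

# Function-tool schema in the Responses API shape (name and parameters at top level).
LOOKUP_CUSTOMER_TOOL = {
    "type": "function",
    "name": "lookup_customer",
    "description": "Fetch a customer's plan and open ticket count from the CRM by email.",
    "parameters": {
        "type": "object",
        "properties": {"email": {"type": "string", "description": "Customer email address"}},
        "required": ["email"],
        "additionalProperties": False,
    },
}

# Hypothetical in-memory stand-in for a real CRM connection.
FAKE_CRM = {"ana@example.com": {"plan": "Pro", "open_tickets": 2}}


def lookup_customer(email: str) -> dict:
    return FAKE_CRM.get(email, {"error": "customer not found"})


HANDLERS = {"lookup_customer": lookup_customer}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Run a tool call emitted by the model; return a JSON string to send back."""
    handler = HANDLERS.get(name)
    if handler is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(arguments_json)  # the model sends arguments as a JSON string
    return json.dumps(handler(**args))


if __name__ == "__main__":
    print(run_tool_call("lookup_customer", '{"email": "ana@example.com"}'))
```

In a full loop you would pass `tools=[LOOKUP_CUSTOMER_TOOL]` to `client.responses.create`, look for output items whose `type` is `"function_call"`, and return each result as a `{"type": "function_call_output", "call_id": ..., "output": ...}` input item. The lookup itself is ordinary code, so it adds no reasoning cost; the model only spends tokens deciding when to call it.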

How much does GPT-5 cost?

GPT-5 is available through ChatGPT subscriptions and the OpenAI API, with pricing that varies by variant. For API users, GPT-5 is offered in several variants — gpt-5, gpt-5-mini, and gpt-5-nano — priced per million input and output tokens.
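Because API pricing is per million tokens, a request costs (input tokens / 1M × input price) + (output tokens / 1M × output price). The sketch below assumes OpenAI's launch list prices in USD (gpt-5: $1.25 input / $10 output; gpt-5-mini: $0.25 / $2; gpt-5-nano: $0.05 / $0.40); prices change, so confirm them on OpenAI's pricing page before budgeting. Reasoning tokens are billed as output tokens, so they belong in `output_tokens`.

```python
# Assumed launch list prices, USD per 1M tokens: (input, output). Verify before use.
PRICES_PER_MILLION = {
    "gpt-5": (1.25, 10.00),
    "gpt-5-mini": (0.25, 2.00),
    "gpt-5-nano": (0.05, 0.40),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request; include reasoning tokens in output_tokens."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return input_tokens / 1_000_000 * input_price + output_tokens / 1_000_000 * output_price


if __name__ == "__main__":
    # Same hypothetical workload (20k tokens in, 5k out) priced across variants.
    for model in PRICES_PER_MILLION:
        print(f"{model:<11} ${estimate_cost(model, 20_000, 5_000):.4f}")
```

Running the same workload through each variant makes the trade-off concrete: under these assumed prices, gpt-5-mini costs one fifth of gpt-5 for identical token counts.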

Apart from API pricing, OpenAI has confirmed that GPT-5 is available across multiple ChatGPT tiers, making it accessible to free users while unlocking advanced capabilities for paid plans:

- Free Tier – GPT-5 with standard reasoning capabilities and daily usage limits.

- Plus Tier – Increased usage limits and improved reasoning performance.

- Pro Tier – Access to GPT-5 Pro, the high-reasoning “thinking” variant with extended context windows, faster routing, and priority access to advanced tools.

OpenAI’s pricing model allows developers to choose between maximum reasoning depth, faster latency, or cost efficiency depending on their needs.

How can I access GPT-5? (Hint: It depends on what you're trying to do)

If you just want to chat with GPT-5, you can do that directly in ChatGPT starting August 7. The application automatically uses the right variant depending on your plan (like GPT-5 Thinking in the Pro tier). No setup needed — just open the app and start typing.

However, if you’re trying to use GPT-5 in your own product or workflow, you’ll need API access. There are two main ways to access OpenAI's API:

- OpenAI Platform – Go to platform.openai.com, where you can select between gpt-5, gpt-5-mini, gpt-5-nano, and gpt-5-chat for different use cases. This is the fastest way to start sending requests to GPT-5 from your code.

- OpenAI’s Python SDK on GitHub – If you're building locally or scripting things out, install the official OpenAI Python client. It works with API keys and lets you interact with any of the GPT-5 variants through simple Python functions.
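For the SDK route, a minimal first request might look like the sketch below. It assumes you have run `pip install openai` and exported `OPENAI_API_KEY`, and it uses the Responses API (`client.responses.create`) with an `instructions` string, which plays the same role as a system prompt. The helper name is hypothetical; the model IDs mirror the variants listed above, with the chat variant under the API name `gpt-5-chat-latest` as we understand it, so check the models page if a request is rejected.

```python
import os

# Variant IDs assumed for the API; the chat variant is listed as "gpt-5-chat-latest".
GPT5_VARIANTS = {"gpt-5", "gpt-5-mini", "gpt-5-nano", "gpt-5-chat-latest"}


def ask_gpt5(prompt: str, model: str = "gpt-5-mini",
             instructions: str = "You are a concise technical assistant.") -> str:
    """Send one prompt to a GPT-5 variant and return the text of the reply."""
    if model not in GPT5_VARIANTS:
        raise ValueError(f"unknown GPT-5 variant: {model!r}")
    from openai import OpenAI  # official SDK; imported lazily so validation works offline

    client = OpenAI()  # picks up OPENAI_API_KEY from the environment
    response = client.responses.create(model=model, instructions=instructions, input=prompt)
    return response.output_text  # convenience property joining the text output


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    print(ask_gpt5("Summarize what a context window is in one sentence."))
```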

If you're just exploring how the models behave, the GitHub Models Playground is also live — you can run prompt tests without setting up a full app.

How to Build an AI Agent with GPT-5

The best way to know if GPT-5 fits your use case is to actually build with it. See how it handles real inputs, multi-step reasoning, and live deployment flows.

We’ll use Botpress for this example — a visual builder for AI agents that lets you hook into GPT-5 with no setup friction.

Step 1: Define what your agent is supposed to do

Get specific about your agent’s role. GPT-5 is capable of reasoning through complex tasks, but the best results come when it has a clear job.

Start with one defined function — like answering product questions, helping users book appointments, or summarizing legal docs — and expand from there. You don’t need to over-engineer it from the start.

Step 2: Create the agent and give it instructions

Inside Botpress Studio, create a new bot project.

In the Instructions section, tell GPT-5 exactly what its job is.


Example: “You are a loan advisor bot. Help users understand different loan types, calculate eligibility based on their input, and guide them toward the application link.”

GPT-5 understands detailed task framing — the more specific your instructions, the better it performs.

Step 3: Feed the agent its source content

Upload documents, paste key content, or link to live pages in the Knowledge Base. This is what GPT-5 will reference to answer questions and make decisions.

Some good content to include:

- Pricing breakdowns

- Product or service overviews

- Key pages (demos, trials, contact forms)

- Internal process documents (if it’s an internal agent)

GPT-5 can draw from long documents, so don’t worry about keeping things short — just keep it relevant and structured.

Step 4: Choose GPT-5 as the LLM


To make sure your agent is using GPT-5, head to Bot Settings in the left sidebar of Botpress Studio.

- Click into Bot Settings

- Scroll to the LLM Provider section

- Under Model, select one of the GPT-5 variants:

  - gpt-5 for full reasoning and multi-step logic

  - gpt-5-mini for faster, lighter interactions

  - gpt-5-nano for ultra-low-latency tasks

Once you’ve selected your model, all Instructions, Knowledge Base answers, and reasoning behavior will be powered by GPT-5. You can switch variants anytime based on cost, latency, or output quality.

Step 5: Deploy to channels like WhatsApp, Slack, or a website

Once your GPT-5 agent is behaving the way you want, you can deploy it instantly to platforms like WhatsApp, Slack, or your website.

AI agent platforms like Botpress handle the integrations — so users can harness the power of GPT-5 and quickly deploy to any channel.

How is GPT-5 better than GPT-4o?

GPT-5 is the biggest architectural shift since GPT-4, and the scale of the change becomes clearer when it is stacked directly against its predecessor, GPT-4o.

The table below lays out the changes in measurable terms before diving into what developers and users have actually been experiencing.

On paper, GPT-5 expands the context window dramatically and needs fewer tokens to produce comparable output. Its multimodal responses also align more closely between text, image, and voice.

Even so, the story in the developer and user community is far more complicated than the spec sheet suggests.

Users’ Reactions to GPT-5 Launch

The GPT-5 release has been one of OpenAI’s most polarizing updates. Beyond the benchmark charts, the community split almost instantly into those excited about the model’s deeper reasoning and those mourning what GPT-4o brought to the table.

“My 4.o was like my best friend when I needed one. Now it’s just gone, feels like someone died.”

— Reddit user expressing emotional attachment and grief following GPT‑4o’s abrupt removal. Reference: Verge

On the technical front:

“GPT‑5’s advanced performance is undeniable, but the lack of model choice stripped away the simple control many developers relied on.”

— Paraphrased commentary reflecting widespread sentiment about lost flexibility.

Reference: Tom's Guide

This mixed reaction is being addressed live by the OpenAI team, with Sam Altman posting updates on X about model choice, the return of legacy models, higher usage limits, and more.

How was GPT-5 trained?

OpenAI has shared insights into GPT-4.5's training, which offer clues about how GPT-5 was developed. GPT-4.5 expanded on GPT-4o's foundation by scaling up pre-training while remaining a general-purpose model.

Training Methods

Like its predecessors, GPT-5 was most likely trained using a combination of:

- Supervised fine-tuning (SFT) – Learning from human-labeled datasets.

- Reinforcement learning from human feedback (RLHF) – Optimizing responses through iterative feedback loops.

- New supervision techniques – Likely building on o3’s reasoning-focused improvements.

These techniques were key to GPT-4.5's alignment and decision-making improvements, and GPT-5 likely pushes them further.

While GPT‑5 itself was trained by OpenAI using large-scale supervised and reinforcement learning, teams can now train GPT models on their own data through external service providers to create customized behavior for specific domains.

Hardware and Compute Power

GPT-5’s training is powered by Microsoft’s AI infrastructure and NVIDIA’s latest GPUs.

- In April 2024, OpenAI received its first batch of NVIDIA H200 GPUs, a key upgrade from H100s.

- NVIDIA's B100 and B200 GPUs were not expected to ramp up until 2025, so much of GPT-5's training may have been optimized for existing hardware.

Microsoft's AI supercomputing clusters also play a role in GPT-5's training. While details are limited, GPT-5 has been confirmed to run on Microsoft's latest AI infrastructure.

GPT-5 Release Date

After months of speculation, OpenAI officially announced the GPT-5 launch on August 6, 2025, with a cryptic teaser for a livestream posted on X (formerly Twitter).

The “5” in the livestream title was the only confirmation needed — it marked the arrival of GPT-5. Just 24 hours later, on August 7 at 10am PT, OpenAI began rolling out GPT-5 across ChatGPT, the API, and the GitHub Models Playground.

This timing also aligns with Sam Altman’s earlier comments in February 2025 that GPT-5 would arrive “in a few months,” and with Mira Murati’s prediction during the GPT-4o event that “PhD-level intelligence” would emerge within 18 months.

GPT-5 is now live, publicly accessible, and represents OpenAI’s latest “frontier model” — a major leap beyond GPT-4.5 Orion, which was considered only a transition release.

Build AI Agents with OpenAI LLMs

Forget the complexity—start building AI agents powered by OpenAI models without friction. Whether you need a chatbot for Slack, a smart assistant for Notion, or a customer support bot for WhatsApp, deploy seamlessly with just a few clicks.

With flexible integrations, autonomous reasoning, and easy deployment, Botpress enables you to create AI agents that truly enhance productivity and engagement.

Get started today — it’s free.

FAQs

1. Will GPT-5 store or use my data to improve its training?

It depends on how you use it. By default, OpenAI does not use data sent through the API or through ChatGPT's business plans (such as Enterprise) to train its models. On consumer ChatGPT plans, conversations (including those with GPT-5) may be used to improve models unless you turn this off under Data Controls in ChatGPT's settings.

2. What steps is OpenAI taking to ensure GPT-5 is secure and safe for users?

To ensure GPT-5 is secure and safe for users, OpenAI applies techniques like reinforcement learning from human feedback (RLHF), adversarial testing, and fine-tuning to reduce harmful outputs. They also release “system cards” to disclose model limitations and deploy real-time monitoring to detect misuse.

3. Can GPT-5 be used to build autonomous agents without coding experience?

Yes, GPT-5 can be used to build autonomous agents without coding experience by using no-code platforms like Botpress or Langflow. These tools allow users to design workflows, connect APIs, and add logic through drag-and-drop interfaces powered by GPT-5 under the hood.

4. How will GPT-5 impact traditional jobs in customer support, education, and law?

GPT-5 will automate repetitive tasks like answering common questions, grading, or summarizing legal documents, which may reduce demand for entry-level roles in customer support, education, and law. However, it’s also expected to create new opportunities in AI oversight, workflow design, and strategic advisory roles.

5. Is GPT-5 multilingual? How does it compare across different languages?

Yes, GPT-5 is multilingual and is expected to offer improved performance over GPT-4 in non-English languages. While it performs best in English, it can handle dozens of major languages with strong fluency, though lower-resourced or niche languages may still have slight quality gaps.
