- Botpress offers transparent pricing with no hidden AI fees, letting your AI costs reflect only your real usage.

- Caching AI responses can cut query costs by around 30% without hurting user experience.

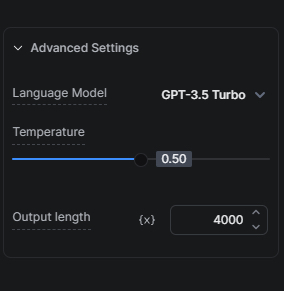

- Choosing the right AI model, like starting with GPT-3.5 Turbo instead of GPT-4, is crucial for balancing cost and quality.

Many businesses struggle to tap into the potential of AI without overspending. We understand the importance of this balance and are committed to providing solutions that let our users take advantage of AI cost-effectively.

Our Approach to AI Cost

First, it’s worth understanding the two ways we reduce AI-related costs for our users while still delivering the benefits of AI.

Transparent Pricing: No Hidden Fees

We don’t add any margin on AI-related tasks, so your AI Spend reflects only your actual usage, with no additional AI fees from our side.

Caching AI Responses

Caching is one of our most effective strategies for cutting bots’ AI costs. By caching AI responses, we reduce the number of requests sent to the LLM provider, which can lower query costs by approximately 30% without compromising the quality of your bot’s interactions with users.
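
To illustrate the general idea, here is a minimal sketch of a response cache, assuming answers are keyed by the exact prompt text. `callModel` is a hypothetical stand-in for a billed request to the LLM provider, and the sketch is not a description of Botpress’s internal implementation; it only shows why repeated identical queries stop generating new provider requests.

```typescript
// Hypothetical signature for a paid request to an LLM provider.
type ModelCall = (prompt: string) => string;

// Wrap a model call with an in-memory cache keyed by the exact prompt text.
function createCachedModel(callModel: ModelCall) {
  const cache = new Map<string, string>();
  let providerCalls = 0;

  return {
    ask(prompt: string): string {
      const cached = cache.get(prompt);
      if (cached !== undefined) {
        return cached; // cache hit: no provider request, no extra cost
      }
      providerCalls++; // cache miss: one billed provider request
      const answer = callModel(prompt);
      cache.set(prompt, answer);
      return answer;
    },
    // Number of requests that actually reached the provider.
    getProviderCalls(): number {
      return providerCalls;
    },
  };
}

// Usage: three questions, one of them asked twice.
const model = createCachedModel((prompt) => `Answer to: ${prompt}`);
model.ask("What are your opening hours?");
model.ask("Do you ship abroad?");
model.ask("What are your opening hours?");
console.log(model.getProviderCalls()); // 2
```

Three questions cost only two provider requests here; the savings grow with how often users repeat the same query.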

Tips to Optimize AI Cost

Now that we’ve covered two of the approaches we take to lower our users’ AI Spend, let’s look at tips you can apply while building your bot to lower its AI cost further.

Optimize your Knowledge Bases

Optimizing your Knowledge Bases (KBs) can significantly reduce your AI Spend, since KBs are usually the biggest AI cost driver in a Botpress project.

Tip 1: Choose the Right AI Model

The choice of AI model significantly impacts cost. Since GPT-3.5 Turbo is faster and cheaper than GPT-4 Turbo, we recommend thoroughly testing your setup with GPT-3.5 Turbo before considering an upgrade to a more advanced model.

Our KB Agent hybrid mode offers an excellent middle ground: it first uses GPT-3.5 Turbo to attempt a response to a query and escalates to GPT-4 Turbo only if necessary.
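
The escalation pattern can be pictured as a simple cascade. This sketch assumes a hypothetical `Model` function that returns an answer plus a `confident` flag; how Botpress actually decides when to escalate isn’t described here, so that flag and the stub models are illustrative assumptions.

```typescript
// Hypothetical result shape: an answer plus whether the model judged it usable.
interface ModelResult {
  answer: string;
  confident: boolean;
}
type Model = (query: string) => ModelResult;

// Try the cheaper model first; only pay for the expensive model when needed.
function answerWithEscalation(query: string, cheap: Model, expensive: Model) {
  const first = cheap(query);
  if (first.confident) {
    return { answer: first.answer, modelUsed: "cheap" };
  }
  const second = expensive(query); // escalation: the costlier call
  return { answer: second.answer, modelUsed: "expensive" };
}

// Usage with stub models: here the cheap stub is only "confident" on short queries.
const cheapModel: Model = (q) => ({ answer: `cheap: ${q}`, confident: q.length < 20 });
const expensiveModel: Model = (q) => ({ answer: `expensive: ${q}`, confident: true });
console.log(answerWithEscalation("Price?", cheapModel, expensiveModel).modelUsed); // cheap
console.log(answerWithEscalation("Compare all plans, limits and add-ons", cheapModel, expensiveModel).modelUsed); // expensive
```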

Tip 2: Shield Your KB

You can reduce your AI Spend by shielding your KB with a Find Records card, so that common FAQs that don’t need AI-generated answers never reach it. Here’s how it works: if you know users typically ask one of, say, 50 well-known questions, you can add those questions and their answers to a Table and query that Table with a Find Records card. Only if no answer is found does the bot search the KB.
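
A minimal sketch of this “table first, KB second” pattern, assuming the FAQ Table is represented by an in-memory array and that `searchKnowledgeBase` is a hypothetical stand-in for the billed KB query. In Botpress the lookup itself would be done by the Find Records card; the exact-match normalization here is a simplification.

```typescript
interface FaqRow {
  question: string;
  answer: string;
}

// Normalize so trivial differences (case, punctuation, extra spaces) still match.
function normalize(text: string): string {
  return text.toLowerCase().replace(/[^a-z0-9\s]/g, "").replace(/\s+/g, " ").trim();
}

// Answer from the FAQ table when possible; fall back to the KB only on a miss.
function answerQuestion(
  question: string,
  faqTable: FaqRow[],
  searchKnowledgeBase: (q: string) => string,
): { answer: string; source: "faq" | "kb" } {
  const key = normalize(question);
  const row = faqTable.find((r) => normalize(r.question) === key);
  if (row) {
    return { answer: row.answer, source: "faq" }; // no AI Spend
  }
  return { answer: searchKnowledgeBase(question), source: "kb" }; // AI Spend incurred
}

// Hypothetical FAQ rows.
const faqs: FaqRow[] = [
  { question: "What are your opening hours?", answer: "9am to 5pm, Monday to Friday." },
  { question: "Do you offer refunds?", answer: "Yes, within 30 days of purchase." },
];
const faqResult = answerQuestion("what are your opening hours", faqs, (q) => `KB answer for: ${q}`);
console.log(faqResult.source); // faq
```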

Tip 3: Properly Scope Your KBs

Depending on the type and amount of information you want to add to a KB, it’s usually best practice to do two things in parallel to cut AI Spend. First, organize your information into smaller KBs, each scoped to a specific product, feature, or topic. Second, guide the user through a workflow with a few questions that narrow the search down to a specific KB. This not only reduces cost but also yields better results.
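
As a rough sketch of the second point, the answers collected by the workflow can be mapped to a single, narrowly scoped KB before any search happens. The product and topic values and the KB names below are hypothetical examples.

```typescript
// Hypothetical answers collected by earlier workflow questions.
interface ScopingAnswers {
  product: "billing" | "mobile-app" | "api";
  topic: "setup" | "troubleshooting";
}

// Map each (product, topic) pair to one small, focused KB,
// so only that KB is searched instead of one large catch-all KB.
const kbByScope: Record<string, string> = {
  "billing/setup": "kb-billing-setup",
  "billing/troubleshooting": "kb-billing-troubleshooting",
  "mobile-app/setup": "kb-mobile-setup",
  "mobile-app/troubleshooting": "kb-mobile-troubleshooting",
  "api/setup": "kb-api-setup",
  "api/troubleshooting": "kb-api-troubleshooting",
};

function pickKnowledgeBase(answers: ScopingAnswers): string {
  const kb = kbByScope[`${answers.product}/${answers.topic}`];
  if (kb === undefined) {
    throw new Error(`No KB configured for ${answers.product}/${answers.topic}`);
  }
  return kb;
}

console.log(pickKnowledgeBase({ product: "api", topic: "troubleshooting" })); // kb-api-troubleshooting
```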

Tip 4: Website KB Data Source vs Search the Web KB Data Source

If you use a website as a KB data source but don’t make frequent changes to it that your bot must reflect in real time, a good cost-effective alternative is to use the Search the Web KB data source instead of the Website KB data source. Before making that switch, test that performance on the questions you expect users to ask does not degrade.

Tip 5: Query Tables with the Find Records or Execute Code Card

If you have a Table with data you want to query, consider using the Find Records card instead of adding the Table to a KB. If you have technical expertise, executing code can be an even more cost-efficient way to query a Table: query the Table directly from an Execute Code card and store the output in a workflow variable that you can refer to later.
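
The sketch below shows the shape of that approach in plain TypeScript. The `orders` array stands in for a Botpress Table and the `workflow` object stands in for workflow variables; both are assumptions for illustration, so check the Botpress documentation for the API actually available inside an Execute Code card.

```typescript
interface OrderRow {
  orderId: string;
  status: string;
  eta: string;
}

// Stand-in for the rows of a Table (hypothetical data).
const orders: OrderRow[] = [
  { orderId: "A-100", status: "shipped", eta: "2024-05-02" },
  { orderId: "A-101", status: "processing", eta: "2024-05-06" },
];

// Stand-in for workflow variables that later cards can reference.
const workflow: { orderStatus?: string } = {};

// Deterministic lookup: no LLM call, so no AI Spend for this step.
function lookUpOrder(orderId: string): void {
  const row = orders.find((r) => r.orderId === orderId.trim().toUpperCase());
  workflow.orderStatus = row
    ? `Order ${row.orderId} is ${row.status} (ETA ${row.eta}).`
    : `No order found with ID ${orderId}.`;
}

lookUpOrder("a-101");
console.log(workflow.orderStatus); // Order A-101 is processing (ETA 2024-05-06).
```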

Tip 6: Control the Chunks

Here, “chunks” refers to the pieces of content retrieved from the Knowledge Base to generate an answer. Generally, the more chunks retrieved, the more accurate the answer, but the longer it takes to generate and the more AI tokens it consumes. Experiment with the number of chunks to find the lowest value that still produces accurate responses.
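
One way to run that experiment is to sweep the number of retrieved chunks against a small set of test questions and keep the smallest value that meets your accuracy bar. In this sketch, `evaluateAccuracy` is a hypothetical function you would supply (for example, by checking the bot’s answers against expected ones), the per-chunk token count is an assumed average, and the accuracy figures are made up for illustration only.

```typescript
// Hypothetical: returns the share of test questions answered correctly (0 to 1)
// when `chunkCount` chunks are retrieved per query.
type Evaluator = (chunkCount: number) => number;

// Rough input-token estimate: fixed prompt overhead plus retrieved chunk text.
function estimateInputTokens(chunkCount: number, avgTokensPerChunk: number, promptTokens: number): number {
  return promptTokens + chunkCount * avgTokensPerChunk;
}

// Smallest chunk count whose accuracy meets the target, or undefined if none does.
function smallestSufficientChunkCount(
  candidates: number[],
  target: number,
  evaluateAccuracy: Evaluator,
): number | undefined {
  const sorted = candidates.slice().sort((a, b) => a - b);
  return sorted.find((k) => evaluateAccuracy(k) >= target);
}

// Made-up accuracy figures, for illustration only.
const fakeAccuracy: Record<number, number> = { 2: 0.7, 4: 0.88, 6: 0.91, 10: 0.92 };
const best = smallestSufficientChunkCount([10, 2, 6, 4], 0.9, (k) => fakeAccuracy[k]);
console.log(best); // 6
console.log(estimateInputTokens(6, 250, 400)); // 1900
```

Here going from 10 chunks to 6 keeps accuracy above the target while cutting the retrieved text by 40%.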

Use the Execute Code Card to Lower AI Spend

The Execute Code card can be a suitable, cost-effective replacement for some AI cards. Here are a few scenarios where you might consider using it:

Smarter Message Alternatives

If you want your bot to send a different AI response to the same query every single time, you must prevent caching (see the Appendix to learn how). In some scenarios, the increase in AI Spend is justified by the improvement to the conversation experience, but this isn’t always the case.

Think of a simple greeting generated by an LLM. Every greeting incurs additional AI Spend. Is it worth it? Probably not. Fortunately, there’s a cost-effective workaround: store multiple responses in an array and use a simple function to pick one at random and present it.
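
A minimal version of that workaround might look like the following; the greeting texts and the `pickGreeting` helper name are illustrative.

```typescript
// Pre-written greeting variants: no LLM call, so no AI Spend per greeting.
const greetings: string[] = [
  "Hi there! How can I help you today?",
  "Hello! What can I do for you?",
  "Hey! What brings you here today?",
  "Welcome! How can I assist you?",
];

// Pick one variant uniformly at random.
function pickGreeting(options: string[]): string {
  const index = Math.floor(Math.random() * options.length);
  return options[index];
}

console.log(pickGreeting(greetings));
```

In a bot, the returned string would typically be stored in a workflow variable and then displayed to the user.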

Depending on the conversation volume, the amount you save by implementing this method can be well worth the effort.

You can find more details on how to implement alternative messages here.

Code Execution for Simple Tasks

For simple tasks, such as data reformatting or extracting information from structured data, using the Execute Code card can be more efficient, cheaper and faster than relying on an LLM.
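
For instance, turning a structured payload into a user-facing sentence needs no LLM at all. The `Subscription` shape and the `describeSubscription` helper below are hypothetical examples.

```typescript
// Hypothetical structured data, e.g. returned by an API call earlier in the flow.
interface Subscription {
  plan: string;
  renewsOn: string; // ISO date, YYYY-MM-DD
  seats: { used: number; total: number };
}

// Deterministic extraction and reformatting: no tokens, no model latency.
function describeSubscription(sub: Subscription): string {
  const [year, month, day] = sub.renewsOn.split("-");
  const remaining = sub.seats.total - sub.seats.used;
  return `Your ${sub.plan} plan renews on ${day}/${month}/${year}. ` +
    `You have ${remaining} of ${sub.seats.total} seats available.`;
}

console.log(describeSubscription({ plan: "Team", renewsOn: "2024-09-01", seats: { used: 7, total: 10 } }));
// Your Team plan renews on 01/09/2024. You have 3 of 10 seats available.
```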

Alternatives to Summary Agent

You can use Execute Code cards to build your own transcript. Place an Execute Code card wherever you want to record the user’s and bot’s messages in an array variable. You can then feed that array to your KB as context.
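
A minimal sketch of such a transcript, assuming the array lives in a workflow variable (represented here by a plain `transcript` array) and that each Execute Code card appends the latest message. The `TranscriptEntry` shape and helper names are hypothetical, and capping the context to the last few entries is an extra assumption meant to keep the KB context short.

```typescript
interface TranscriptEntry {
  role: "user" | "bot";
  text: string;
}

// Stand-in for an array workflow variable.
const transcript: TranscriptEntry[] = [];

// Called from an Execute Code card at each point you want to record.
function recordMessage(role: "user" | "bot", text: string): void {
  transcript.push({ role, text });
}

// Flatten the most recent messages into a plain-text context string for the KB.
function transcriptAsContext(entries: TranscriptEntry[], maxEntries: number): string {
  const recent = maxEntries > 0 ? entries.slice(-maxEntries) : [];
  return recent
    .map((e) => `${e.role === "user" ? "User" : "Bot"}: ${e.text}`)
    .join("\n");
}

recordMessage("user", "I need help with my invoice.");
recordMessage("bot", "Sure, which invoice number?");
recordMessage("user", "INV-2041");
console.log(transcriptAsContext(transcript, 2));
// Bot: Sure, which invoice number?
// User: INV-2041
```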

Simplify When Possible

Opt for the simplest interaction method that accomplishes the same goal without degrading the user experience. For example, if you want to collect user feedback, a simple star rating system with comments is more cost-effective than using AI to collect the same information.
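
As a sketch of the non-AI alternative, validating a star rating and an optional comment takes only a few lines of ordinary code. The 1 to 5 scale, the `Feedback` shape, and the `parseFeedback` helper are illustrative assumptions.

```typescript
interface Feedback {
  stars: number;    // 1 to 5
  comment?: string; // optional free-text comment
}

// Validate a star rating (e.g. from buttons labeled 1 to 5) plus an optional comment.
// Returns undefined when the rating is not a whole number from 1 to 5.
function parseFeedback(rawStars: string, rawComment: string): Feedback | undefined {
  const trimmedStars = rawStars.trim();
  if (!/^[1-5]$/.test(trimmedStars)) {
    return undefined;
  }
  const stars = Number(trimmedStars);
  const comment = rawComment.trim();
  return comment ? { stars, comment } : { stars };
}

console.log(JSON.stringify(parseFeedback("4", " Quick and helpful "))); // {"stars":4,"comment":"Quick and helpful"}
console.log(parseFeedback("7", "")); // undefined
```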

Tips for AI Tasks, AI Generate Text, and Translations

Choose the Right AI Model

Yes, choosing the right AI model is so important that it’s worth mentioning twice. As with KBs, the choice of AI model significantly impacts the cost of AI Tasks. Opt for GPT-3.5 Turbo for less complicated instructions, and thoroughly test your setup with this model before considering an upgrade to a more advanced one. Remember, GPT-4 Turbo costs 20x more than GPT-3.5 Turbo, so unless its results are considerably better, stick with GPT-3.5 Turbo.
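
To see what a 20x price gap means in practice, here is a back-of-the-envelope calculation. The per-token price and the monthly token volume are placeholder assumptions; only the 20x ratio comes from the comparison above, and real prices vary by model and by input versus output tokens.

```typescript
// Placeholder price for the cheaper model (USD per 1,000 tokens); not a real quote.
const cheapPricePer1kTokens = 0.001;
// Ratio from the text: GPT-4 Turbo costs about 20x more than GPT-3.5 Turbo.
const priceRatio = 20;

function monthlyCost(tokensPerMonth: number, pricePer1kTokens: number): number {
  return (tokensPerMonth / 1000) * pricePer1kTokens;
}

const tokensPerMonth = 5000000; // assumed workload
const cheapCost = monthlyCost(tokensPerMonth, cheapPricePer1kTokens);
const expensiveCost = monthlyCost(tokensPerMonth, cheapPricePer1kTokens * priceRatio);
console.log(cheapCost.toFixed(2), expensiveCost.toFixed(2)); // 5.00 100.00
```

Whatever the actual base price, the same workload on the pricier model costs twenty times as much, so the quality gain has to be worth that multiple.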

In addition to the above, you can also conserve AI Spend by reducing the number of tokens consumed in each AI Task run.

My recommendation is to be careful when lowering the token limit, because any tokens beyond it will be truncated. For example, if you limit the length to 2,000 tokens and your prompt plus your output exceeds 2,000 tokens, your input will be truncated accordingly.
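
The truncation rule in that example comes down to simple arithmetic. This sketch assumes the limit covers prompt plus expected output and that any overflow comes out of the input; the exact truncation behavior of the AI Task card may differ, and the token counts are illustrative.

```typescript
// Input tokens that survive when prompt + expected output must fit within a limit.
function keptInputTokens(inputTokens: number, expectedOutputTokens: number, limit: number): number {
  const available = Math.max(0, limit - expectedOutputTokens);
  return Math.min(inputTokens, available);
}

// Example with a 2,000-token limit: 1,800 input tokens and 500 expected output tokens.
const kept = keptInputTokens(1800, 500, 2000);
console.log(kept, 1800 - kept); // 1500 300
```

In this case 300 input tokens would be cut, which can silently drop context the model needs.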

AI Task vs AI Generate Text

For simple text outputs, the AI Generate Text card uses fewer tokens and is easier to set up than the AI Task card. For tasks involving parsing information, the AI Task card outperforms the AI Generate Text card.

Therefore, my recommendation is to use the AI Task card when you want to use AI to process information (e.g. if you want to detect the user’s intention or if you want the AI to analyze the input). But, if you want to leverage AI to generate text, then use the AI Generate Text card instead (e.g. if you want to take a KB answer and expand it or if you want to generate a question creatively).

For a deeper dive into the differences between the AI Task card and AI Generate Text card, learn more here.

Translations

If your bot will handle a high volume of multilingual conversations, consider using hooks to integrate external translation services as a more cost-effective option.
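
The general shape of such a hook might look roughly like this. The `Translator` type stands in for whatever external translation service you call, and `translateIncoming` is a hypothetical helper, not a Botpress hook signature; refer to the Botpress hooks documentation for the real entry points.

```typescript
// Hypothetical stand-in for an external translation service client.
type Translator = (text: string, from: string, to: string) => string;

interface IncomingMessage {
  text: string;
  language: string; // e.g. detected upstream or chosen by the user
}

// Translate incoming text into the bot's language with an external service
// instead of an LLM; skip the call entirely when no translation is needed.
function translateIncoming(msg: IncomingMessage, botLanguage: string, translate: Translator): string {
  if (msg.language === botLanguage) {
    return msg.text; // nothing to translate, no cost
  }
  return translate(msg.text, msg.language, botLanguage);
}

// Usage with a fake translator standing in for the external service.
const fakeTranslate: Translator = (text, from, to) => `[${from}->${to}] ${text}`;
console.log(translateIncoming({ text: "Bonjour", language: "fr" }, "en", fakeTranslate)); // [fr->en] Bonjour
```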

You can find more information about hooks here.

Conclusion

With these strategies and tips, you’ll be able to optimize your AI Spend in Botpress. Understanding the cost implications of different tasks and choosing the most efficient methods for your needs will reduce your AI-related expenses without compromising performance.

Our team is here to help you navigate these options and ensure your bot delivers the best possible experience for your users at the most efficient cost. Visit our Pricing page for more information or visit our Discord server for help.

Appendix

How to Prevent Caching

To bypass caching and always get live results, use either of the following options:

- For more permanent caching prevention: add `And discard:{{Date.now()}}` in all your AI-related cards (e.g., in the AI Task prompts, in the KB context, etc.).

- For temporary caching prevention: publish your bot and test it from an incognito window.
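
Why the `Date.now()` trick works: a cache keyed on prompt text only returns a stored answer when the text is identical, and appending the current timestamp makes each prompt unique. The sketch below assumes exact-match keying, which is a simplification; `isCacheHit` is a hypothetical helper, not part of Botpress.

```typescript
// A cache keyed on exact prompt text (a simplification for illustration).
const seen = new Set<string>();
function isCacheHit(prompt: string): boolean {
  const hit = seen.has(prompt);
  seen.add(prompt);
  return hit;
}

const basePrompt = "Write a short greeting for the user.";
console.log(isCacheHit(basePrompt)); // false (first time)
console.log(isCacheHit(basePrompt)); // true (identical text, served from cache)

// Appending a timestamp, as `And discard:{{Date.now()}}` does, changes the text on each run.
// (Two runs within the same millisecond would still produce identical text.)
console.log(isCacheHit(`${basePrompt} And discard:${Date.now()}`)); // false
```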

Note: all else being equal, if you remove this caching layer and make no other changes to your bot, your AI Spend will increase.

Recommended Courses

- ChatGPT Prompt Engineering for Developers (although the title says for developers, non-developers will benefit too!)

- Building Systems with the ChatGPT API
