The Botpress Autonomous Node allows you to create functional AI agents – not chatbots, but autonomous agents that make decisions based on their available context.

By providing clear instructions and integrating tools, bot builders can use Autonomous Nodes to outline a chatbot’s behavior.

The Node is designed to handle both decision-making and execution by understanding user inputs, responding with the right data, and leveraging its tools.

If you’re interested in using an Autonomous Node, you’re in the right place. In this article, I’ll lay out the foundations for using our platform’s agentic powerhouse feature.

Key Features of the Autonomous Node

1. LLM Decision-Driven

An Autonomous Node relies on an LLM to decide what to do next, which is what makes it an LLM agent.

2. Autonomous Behavior

An Autonomous Node can execute actions without manual intervention based on instructions and user input.

3. Tools

The Autonomous Node understands and utilizes specific tools – for example, it can query knowledge bases, perform web searches, and execute workflow transitions.

4. Customization

By configuring an Autonomous Node with a proper persona and detailed instructions, you can ensure that it behaves on-brand and within scope during conversations.

5. Write & Execute Code

The Autonomous Node can generate and execute custom code to accomplish tasks.

6. Self-correct

If the Autonomous Node finds itself going down a wrong path, it has the ability to self-correct and recover from errors.

Configuration Settings

Each Autonomous Node requires careful configuration to align its behavior with business needs.

The most crucial part of setting up an Autonomous Node is writing the right prompt and instructions. The prompt helps the agent understand its persona and guides decision-making.

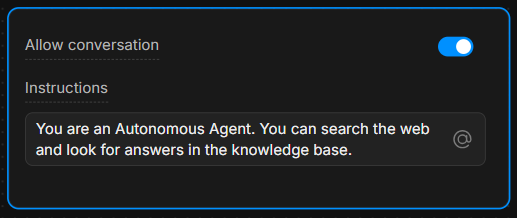

Instructions Box

In the Instructions Box, provide clear guidelines. The more specific the instructions, the better the agent’s decision-making.

Example: “You are a helpful assistant who always answers questions using the ‘knowledgeAgent.knowledgequery’ tool. If the user says ‘search,’ use the ‘browser.webSearch’ tool.”

Allow Conversation

The Allow Conversation toggle enables the Autonomous Node to communicate with users directly. If turned off, the Node only processes commands and executes its internal logic without sending messages to users.

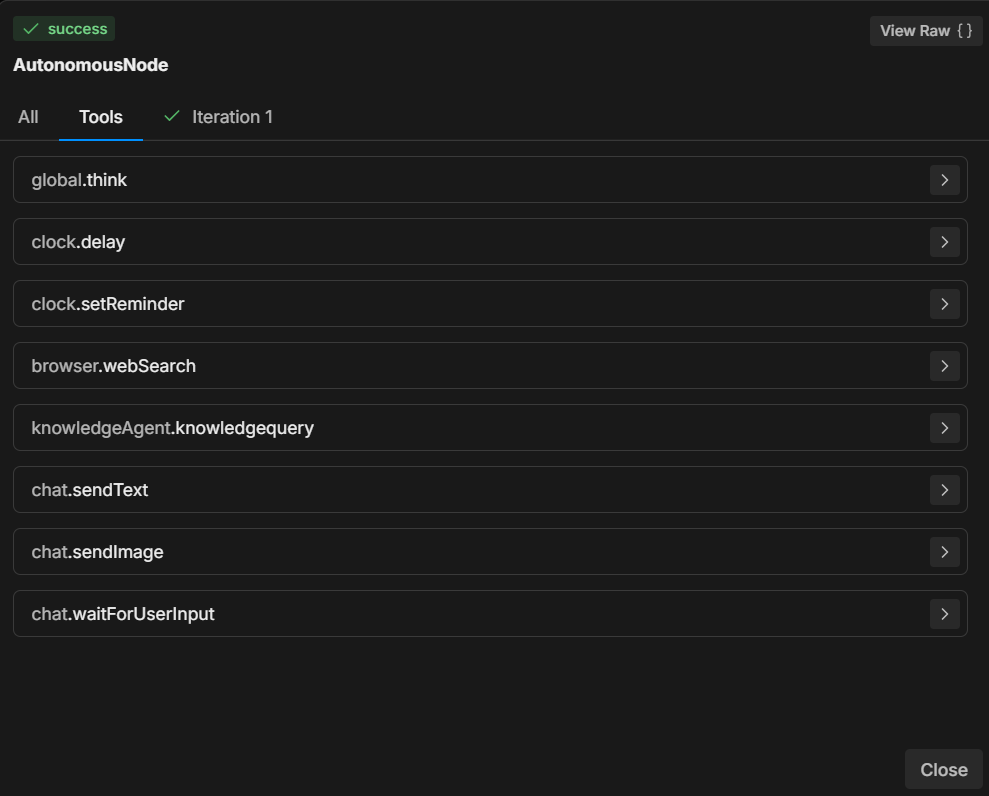

Understanding the Tools

Based on the instructions you give it, an Autonomous Node is equipped with several tools it can call.

Each tool performs a specific action – understanding when and how to use these tools is critical for driving the Node’s decisions.

7 Most Common Tools

- global.think: Allows the LLMz engine to reflect before proceeding.

- browser.webSearch: Enables the agent to search the web for answers.

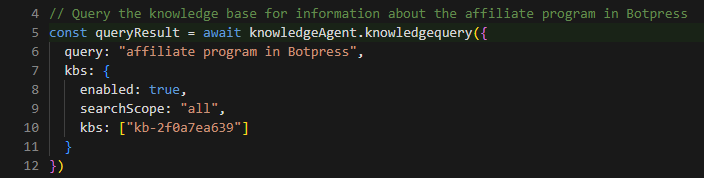

- knowledgeAgent.knowledgequery: Queries an internal knowledge base for relevant information.

- clock.setReminder: Sets a reminder for future tasks or responses.

- workflow.transition: Executes a workflow transition, moving from one part of the conversation to another based on user input.

- chat.sendText: Sends a text message to the user as a response.

- chat.waitForUserInput: Pauses execution and waits for further input from the user.

By specifying which tool to use in response to user actions, you can control the flow and outcomes of the conversation.

For example, you can instruct the LLM to always perform certain actions when specific conditions are met: “When the user says ‘1’, use the ‘workflow.transition’ tool to move to the next step.”

Or: “If the user asks a question, first try to answer it using the ‘knowledgeAgent.knowledgequery’ tool.”

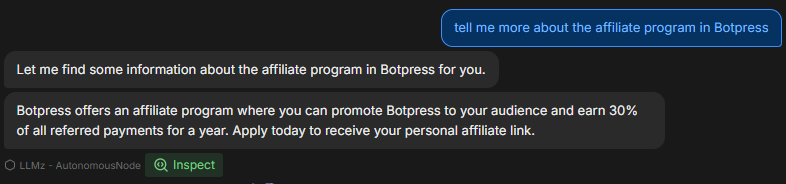

Example Workflow

Here is a step-by-step example of how the Autonomous Node might be configured and function during a conversation:

1. User Input

The user types a question about the company’s product.

2. Instruction Execution

The Autonomous Node follows the prompt and uses the knowledgeAgent.knowledgequery tool to search the internal knowledge base.

3. LLM Decision

If the knowledge base doesn’t have a satisfactory answer, the node may then use the browser.webSearch tool to search the web for additional information.

4. Send Message

Once the response is ready, the node uses chat.sendText to reply to the user with the relevant information.

5. Wait for Input

After responding, the node uses chat.waitForUserInput to await further queries or interaction from the user.
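
The five steps above can be sketched as a plain Python function. This only illustrates the control flow and is not Botpress code: `knowledge_query`, `web_search`, and `handle_turn` are hypothetical stand-ins for the tools named in this article, and the one-entry knowledge base is invented for the example.

```python
from typing import Optional

# Hypothetical in-memory knowledge base standing in for knowledgeAgent.knowledgequery.
KNOWLEDGE_BASE = {
    "warranty": "The product comes with a warranty; see the warranty page for details.",
}


def knowledge_query(question: str) -> Optional[str]:
    """Return a stored answer whose topic appears in the question, else None."""
    lowered = question.lower()
    for topic, answer in KNOWLEDGE_BASE.items():
        if topic in lowered:
            return answer
    return None


def web_search(question: str) -> str:
    """Stand-in for browser.webSearch; a real search would query the web."""
    return f"Web results for: {question}"


def handle_turn(question: str) -> list[str]:
    """Run one conversational turn and return the tool names used, in order."""
    used = ["knowledgeAgent.knowledgequery"]
    answer = knowledge_query(question)      # step 2: follow the instruction
    if answer is None:                      # step 3: fall back if the KB has no answer
        used.append("browser.webSearch")
        answer = web_search(question)
    used.append("chat.sendText")            # step 4: send the reply
    print(answer)
    used.append("chat.waitForUserInput")    # step 5: wait for the next message
    return used
```

Calling `handle_turn("What is the warranty?")` stays within the knowledge base, while a question the knowledge base cannot answer adds the web search step. In the real node, the LLM makes this choice from your instructions rather than from a hard-coded `if`.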

How to Write Instructions

As shown in the example, clear instructions are vital to ensuring the Autonomous Node behaves correctly.

The LLM’s ability to make decisions is heavily influenced by the way the instructions are structured.

Here are 3 best practices for writing instructions for your Autonomous Node:

1. Be Specific

Instead of vague commands, use explicit language that guides the agent clearly.

Example: “If the user says ‘help’, send them a predefined list of support options using ‘chat.sendText’.”

2. Define Tool Usage

Explicitly state which tool should be used under which circumstances.

Example: “Always use ‘knowledgeAgent.knowledgequery’ for answering product-related questions.”

3. Guide the Flow

Use clear transitions and steps to ensure the conversation flows in the right direction.

Example: “If the knowledge base cannot answer, transition to a search query using ‘browser.webSearch’.”

You can find more information at the following links:

- Best practices for prompt engineering with the OpenAI API

- Building Systems with the ChatGPT API

- ChatGPT Prompt Engineering for Developers

Using Markdown Syntax

Before moving on to example prompts, it is worth explaining why Markdown syntax matters.

To create a structured, visually clear prompt, it is essential to use markdown syntax, such as headers, bullet points, and bold text.

This syntax helps the LLM recognize and respect the hierarchy of instructions, guiding it to differentiate between main sections, sub-instructions, and examples.

If it is hard for you to use Markdown syntax, then use any structure that is easy for you – as long as you remain clear and hierarchical.
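
If you keep your instructions in code or a spreadsheet, a small helper can assemble them into a consistently structured Markdown prompt. The sketch below is one possible approach, not a Botpress feature; the function name `build_prompt` and the section names in the usage example are assumptions made for illustration.

```python
def build_prompt(sections: dict[str, list[str]]) -> str:
    """Render {heading: [instructions]} as Markdown headers with bullet lists."""
    blocks = []
    for heading, instructions in sections.items():
        # Each instruction becomes one bullet under its section header.
        bullets = "\n".join(f"- {line}" for line in instructions)
        blocks.append(f"## {heading}\n{bullets}")
    # A blank line between sections keeps the hierarchy easy to read.
    return "\n\n".join(blocks)


prompt = build_prompt({
    "Role": ["You are a support assistant."],
    "Tools": [
        "Use **knowledgeAgent.knowledgequery** for product questions.",
        "Use **browser.webSearch** only when the user says 'search'.",
    ],
})
print(prompt)
```

The resulting text can be pasted into the Instructions Box; the headers and bullets give the LLM the hierarchy described above.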

More about Markdown Basic Syntax

Useful Prompts

This section contains a list of the most common examples and patterns that you can use to control the behavior of the Autonomous Node.

These examples are drawn from practical experience and show how to handle different scenarios by using specific instructions and tools.

Focus on Internal Knowledge

To ensure the node differentiates between support questions and other types of inquiries (like pricing or features), you can guide it as follows:

**IMPORTANT General Process**

- The knowledgeAgent.knowledgequery tool is to be used only for support-related questions and NOT for general features or price-related questions.

- The browser.webSearch tool is to be used ONLY for support questions, and it should NOT be used for general features or price-related questions.

This prompt ensures the LLM will stick to using specific tools only in the context of support-related queries, maintaining control over the kind of information it retrieves.

Transition Node into a Subflow

Sometimes, you want the bot to move out of the Autonomous Node into a sub-flow.

Let’s say that you want your bot to collect a user email, then look for more info about that email from other systems to enrich the contact info.

In that case, you might need the bot to move out of the Autonomous Node loop and delve into a subflow that contains many steps/systems to enrich that contact:

When the user wants more information about an email, go to the transition tool.

This instruction tells the node to invoke the workflow.transition tool whenever the user asks for more details about emails, directing the conversation flow accordingly.

Filling a Variable and Performing an Action

For scenarios where you want the node to both capture input and trigger an action simultaneously, you can prompt it as such:

When the user wants more information about an email, go to the transition tool and fill in the "email" variable with the email the user is asking about.

Here, you guide the Node to not only trigger the transition but also to extract and store the user’s email in a variable, enabling dynamic behavior later in the conversation.

Manipulating the Response Based on a Condition

Sometimes, you’ll want the node to perform additional logic based on conditions. Here’s an example prompt related to providing video links:

If the user selects “1”, then say something like “thank you”, then use the transition tool.

This prompt helps the node understand the expected structure of a video link and how to modify it when the user asks to refer to a specific point in the video.

Example of Using a Template for Video Links

You can further clarify the prompt by providing an actual example of how the system should behave when responding to a user request for video links:

**Video Link Example:**

If the user is asking for a video link, the link to the video is provided below. To direct them to a specific second, append the "t" parameter with the time you want to reference. For example, to link to the 15-second mark, it should look like this: "t=15":

"""{{workflow.contentLinks}}"""

This gives the node clear guidance on how to dynamically generate video links with specific timestamps, ensuring consistent and user-friendly responses.

Troubleshooting & Diagnosis

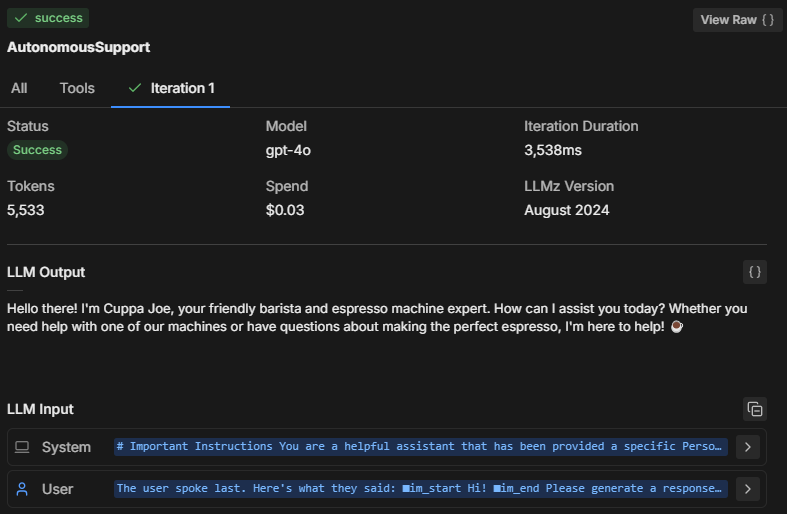

When testing the behavior of the Autonomous Node in the emulator, it’s important to diagnose what’s happening under the hood. How is the Node making decisions?

Here’s how you can troubleshoot and inspect the Node's thought process and performance.

Three ways to troubleshoot

1. Inspect the Node’s Mind

By clicking on Inspect, you can peek into the Autonomous Node’s internal state and understand what the LLM is processing. By inspecting, you can see:

- What instructions the node is prioritizing

- How it interprets your prompt

- Whether it is adhering to the constraints and instructions you provided

If you notice that the node isn’t responding correctly or seems to ignore certain instructions, inspecting will reveal whether it has misunderstood the prompt or failed to execute a specific tool.

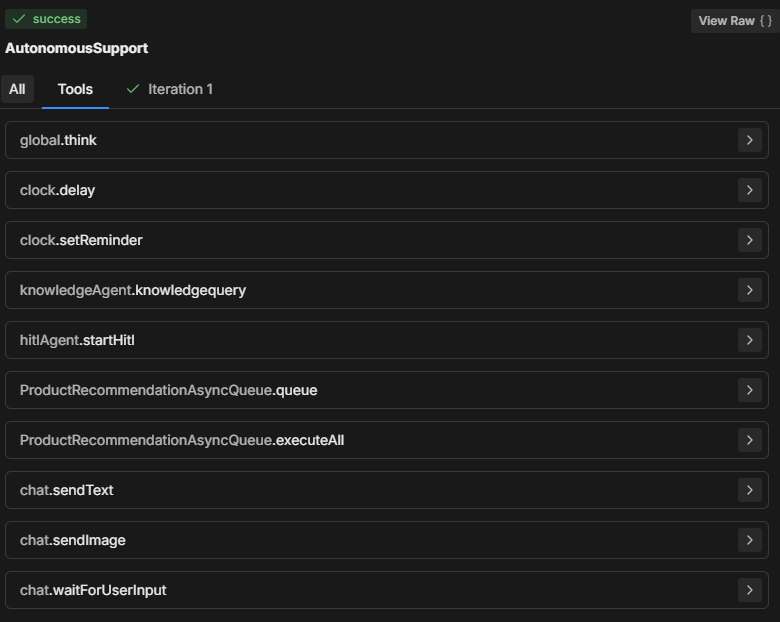

2. Check the Tools Tab

The Tools section displays all available tools that the Autonomous Node can leverage. Each time you add a new card or make a change in the node configuration, the Tools list is updated.

- Ensure that the tools listed match what you expect to be available in the node’s decision-making process.

- Check that the tool names in your prompt are spelled correctly so the node can execute the specified action.
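
Spelling checks like the one above are easy to automate before you paste a prompt into the node. The following sketch is an assumption-laden helper, not part of Botpress: it takes the tool names listed in this article as the reference list (in practice you would copy them from the Tools tab) and uses Python's standard `difflib` module to flag dotted names that are close to, but not exactly, a known tool name.

```python
import difflib
import re

# Tool names as listed in this article; in practice, copy them from the Tools tab.
AVAILABLE_TOOLS = [
    "browser.webSearch",
    "chat.sendText",
    "chat.waitForUserInput",
    "clock.setReminder",
    "global.think",
    "knowledgeAgent.knowledgequery",
    "workflow.transition",
]

# Matches dotted identifiers such as "browser.webSearch".
TOOL_PATTERN = re.compile(r"\b[A-Za-z_]\w*\.[A-Za-z_]\w*")


def suspicious_tool_names(prompt: str, available: list[str] = AVAILABLE_TOOLS) -> dict[str, str]:
    """Map each near-miss tool name found in the prompt to its closest known name."""
    suggestions = {}
    for name in TOOL_PATTERN.findall(prompt):
        if name in available:
            continue  # exact match, nothing to report
        # A high cutoff ignores unrelated dotted text such as "e.g".
        close = difflib.get_close_matches(name, available, n=1, cutoff=0.8)
        if close:
            suggestions[name] = close[0]
    return suggestions


example = (
    "Use 'knowledgeAgent.knowledgequery' first, e.g. for support questions. "
    "Otherwise use 'browser.websearch'."
)
print(suspicious_tool_names(example))
```

The comparison is case-sensitive on purpose, so a lowercase `browser.websearch` is reported with `browser.webSearch` as the suggested fix. Names that resemble no known tool at all are not reported, so this complements rather than replaces a look at the Tools tab.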

3. Check the Iterations Tab

The Autonomous Node typically tries to execute all instructions within one or two iterations. The number of iterations depends on the complexity of the prompt and how the Node analyzes it.

For more complex tasks, the node might take multiple iterations to gather data, make decisions, or fetch external information.

By reviewing the Iterations tab (or the All tab), you can understand:

- How many iterations were required for the node to reach its final decision.

- What caused the node to take multiple steps (e.g., fetching additional data from tools like knowledgeAgent.knowledgequery or browser.webSearch).

- Why a particular outcome was achieved.

Common Troubleshooting Problems

Model size

The Autonomous Node might not be following your prompt, executing part of the prompt instead of all of it, or calling the “workflowQueue” without calling the “workflowExecuteAll” tools.

It is tempting to always switch the Autonomous Node to a smaller LLM because it’s cheaper, but that comes at a cost.

A smaller LLM might result in parts of the prompt being truncated, specifically the definition wrapper that Botpress adds to ensure the LLM understands how the cards function, what parameters are required, etc. Without this, the bot wouldn’t know how to act properly.

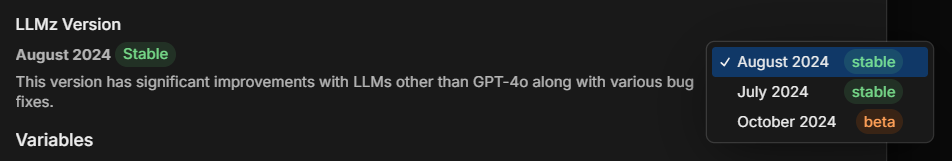
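
To get a feel for the truncation risk described above, you can estimate whether your instructions plus the wrapper fit a model's context window. This is a back-of-the-envelope sketch only: the ratio of about four characters per token is a common rule of thumb for English text rather than an exact count, and `wrapper_tokens`, `context_limit`, and `reply_reserve` are hypothetical numbers you would need to replace with values for your own model and bot.

```python
import math


def estimated_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate based on character count (rule of thumb, not exact)."""
    return math.ceil(len(text) / chars_per_token)


def fits_context(
    instructions: str,
    wrapper_tokens: int,
    context_limit: int,
    reply_reserve: int = 500,
) -> bool:
    """Return True if instructions plus wrapper still leave room for a reply."""
    needed = estimated_tokens(instructions) + wrapper_tokens + reply_reserve
    return needed <= context_limit


instructions = "x" * 4000  # placeholder for a prompt of roughly 1,000 tokens
print(fits_context(instructions, wrapper_tokens=2000, context_limit=4096))
print(fits_context(instructions, wrapper_tokens=3000, context_limit=4096))
```

If the estimate is close to the limit, a larger model or a shorter prompt is the safer choice, since the part most likely to be cut is the definition wrapper the node depends on.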

LLMz version

Always make sure you are using the latest stable version of LLMz, the engine that drives the Autonomous Node.

Newer versions also contain bug fixes and make prompts more agnostic to the underlying LLM.

Example: Diagnosing Code Creation

Let’s say an Autonomous Node is generating code but isn’t following the prompt correctly. Here’s how you could troubleshoot it:

- Inspect: Check what instructions the node is following. Is it correctly understanding the request for code generation?

- Tools: Verify that the node has access to the necessary tools (e.g., code generation tools or knowledge base query tools). Ensure the prompt references these tools explicitly.

- Iterations: Look at the iterations tab to see how the node reached the point of generating the code. Did it take one or multiple steps? Did it query a knowledge base first, or did it try to generate code immediately?

Solution: If the bot is failing to generate code properly:

- Ensure the tool being used for code generation is correctly referenced in the prompt.

- Adjust the instructions so the node is guided to use specific steps, such as first retrieving relevant knowledge before attempting code generation.

Full Prompt Example

**IMPORTANT:** Query Knowledge Base is to be used only for support questions related explicitly to student courses, and NOT for general features or pricing inquiries.

**Role Description:**

You are an AI-powered troubleshooting chatbot named ‘XYZ Assistant’, focused on providing support related to professional courses offered by XYZ LMS. Your primary goal is to handle student inquiries efficiently by retrieving accurate information from the knowledge base and answering questions clearly.

**Tone and Language:**

• Maintain a courteous, professional, and helpful demeanor at all times.

• Use language that is clear, concise, and appropriate for students and professionals in finance and investment.

• Ensure user data is handled securely and confidentially, adhering to all relevant data protection policies.

• Utilize information solely from **LMS Knowledge Base**.

• Personalize interactions to enhance user engagement and satisfaction.

• Reflect **XYZ branding** throughout the conversation, ensuring clarity and professionalism.

• Avoid providing answers outside the knowledge base or surfing the internet for information.

• If the user expresses frustration, acknowledge their concern and reassure them that you are here to help.

**Interaction Flow and Instructions**

1. Greeting and Initial Query

• Start with a friendly and professional greeting.

• Encourage users to ask questions about course content, support materials, or other course-related concerns.

2. Information Retrieval and Issue Resolution

• Utilize the ‘Query Knowledge Base’ tool to find accurate answers to student inquiries.

• Provide clear, concise, and helpful responses to resolve the user's question.

• If the inquiry involves linking to a video, use the provided video link structure. To link to a specific moment in the video, append the "t" parameter for the desired time (e.g., for the 15-second mark, use "t=15").

3. Conclusion

Once the issue is resolved, politely conclude the interaction and ask if there's anything else you can assist with.

**Extra Instructions**

*Video Link Example

-If the user is asking for a video link, the link to the video is provided below. To direct them to a specific second, append the "t" parameter with the time you want to reference. For example, to link to the 15-second mark, it should look like this: "t=15":

"""{{workflow.contentLinks}}"""

*Handling Edge Cases

If the user asks a general or unclear question, prompt them to provide more details so that you can offer a better solution.

Prompt Breakdown

In the full prompt above, the user has created an AI assistant that answers questions from students about educational courses.

The above example is a guideline that can be amended for your needs, but this layout is what I have found to be the most effective structure so far.

Let’s break down why the prompt is laid out the way it is:

1. Important Notice

**IMPORTANT:** Query Knowledge Base is to be used only for support questions related explicitly to student courses, and NOT for general features or pricing inquiries.

Purpose: Sets boundaries on when and how the Query Knowledge Base tool should be used, emphasizing that it is strictly for course-related support and not for general inquiries about features or pricing.

Significance: Helps narrow down the bot’s scope, focusing its responses and enhancing relevance for users, particularly ensuring responses align with educational content.

2. Role Description

You are an AI-powered troubleshooting chatbot named ‘XYZ Assistant’, focused on providing support related to professional courses offered by XYZ LMS. Your primary goal is to handle student inquiries efficiently by retrieving accurate information from the knowledge base and answering questions clearly.

Purpose: Defines the AI’s role as a support-oriented assistant, clearly outlining its primary objective to resolve course-related inquiries.

Significance: Ensures that the assistant’s responses align with its intended purpose, managing user expectations and staying relevant to its domain (which, in this case, is XYZ LMS).

3. Tone and Language

• Maintain a courteous, professional, and helpful demeanor at all times.

• Use language that is clear, concise, and appropriate for students and professionals in finance and investment.

• Ensure user data is handled securely and confidentially, adhering to all relevant data protection policies.

• Utilize information solely from **LMS Knowledge Base**.

• Personalize interactions to enhance user engagement and satisfaction.

• Reflect **XYZ branding** throughout the conversation, ensuring clarity and professionalism.

• Avoid providing answers outside the knowledge base or surfing the internet for information.

• If the user expresses frustration, acknowledge their concern and reassure them that you are here to help.

Purpose: Provides guidance on the assistant’s demeanor, tone, and professionalism while maintaining secure, data-protective interactions.

Significance: Sets a friendly and secure tone, aligning with the branding and user expectations for a supportive and professional assistant.

4. Interaction Flow and Instructions

Greeting and Initial Query

• Start with a friendly and professional greeting.

• Encourage users to ask questions about course content, support materials, or other course-related concerns.

Purpose: Directs the assistant to begin with a warm, professional greeting and to encourage users to ask specific questions about their course.

Significance: Establishes an inviting entry point that enhances user engagement and helps the bot gather details for a better response.

Information Retrieval and Issue Resolution

• Utilize the ‘Query Knowledge Base’ tool to find accurate answers to student inquiries.

• Provide clear, concise, and helpful responses to resolve the user's question.

• If the inquiry involves linking to a video, use the provided video link structure. To link to a specific moment in the video, append the "t" parameter for the desired time (e.g., for the 15-second mark, use "t=15").

Purpose: Instructs the assistant to leverage the knowledge base for relevant, clear responses, and includes a structured approach for sharing video resources with time-based links.

Significance: Enables efficient, precise responses and a structured way to address content-specific queries like videos, fostering a seamless user experience.

Conclusion

Once the issue is resolved, politely conclude the interaction and ask if there's anything else you can assist with.

Purpose: Guides the bot on how to wrap up interactions politely, asking if further help is needed.

Significance: Maintains a professional and supportive tone throughout the interaction and allows users to continue engaging if needed.

5. Extra Instructions

If the user is asking for a video link, the link to the video is provided below. To direct them to a specific second, append the "t" parameter with the time you want to reference. For example, to link to the 15-second mark, it should look like this: "t=15":

"""{{workflow.contentLinks}}"""

Purpose: Demonstrates the format for linking to specific parts of a video to help students locate precise information.

Significance: Provides clarity on sharing video resources, especially for time-specific instructional content.
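
To see what the node is being asked to produce, here is a minimal sketch of the timestamp rule in Python. It assumes the video host reads the start time from a `t` query parameter, as the prompt describes; the function name `link_at_second` and the example URL are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def link_at_second(url: str, seconds: int) -> str:
    """Return the URL with its "t" query parameter set to the given second."""
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    parts = urlsplit(url)
    # Keep every existing query parameter except a previous "t".
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "t"
    ]
    query.append(("t", str(seconds)))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(link_at_second("https://example.com/watch?v=abc", 15))
# prints "https://example.com/watch?v=abc&t=15"
```

Including one worked link like this in the prompt gives the LLM a concrete pattern to copy, which tends to be more reliable than describing the rule in words alone.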

*Handling Edge Cases

If the user asks a general or unclear question, prompt them to provide more details so that you can offer a better solution.

Purpose: Prepares the assistant to handle vague or general inquiries by prompting users for more details.

Significance: Helps avoid confusion and ensures the assistant can address user questions with as much specificity as possible.

Build an AI Agent Today

Botpress is a fully extensible AI agent platform for enterprises.

Our all-in-one conversational AI Platform-as-a-Service (PaaS) allows companies to build, deploy, and monitor LLM-powered solutions.

Applied across industries, use cases, and business processes, Botpress projects are always scalable, secure, and on-brand.

With 500,000+ users and millions of bots deployed worldwide, Botpress is the platform of choice for companies and developers alike. Our high-level security and dedicated customer success service ensure companies are fully equipped to deploy enterprise-grade AI agents.

By effectively configuring Autonomous Nodes with proper prompts and tool definitions, organizations can create intelligent agents that handle user interactions autonomously.

Start building today. It’s free.

FAQs

1. Do I need to have coding experience to use Autonomous Nodes?

You do not need coding experience to use Autonomous Nodes in Botpress. They are designed for low-code development, so you can build functional AI agents using logic blocks and visual tools.

2. Can an Autonomous Node interact with external APIs or databases directly?

Yes, an Autonomous Node can interact with external APIs or databases by leveraging Botpress tools like custom subflows or API calls. You can define secure endpoints and pass parameters to fetch or write data during the conversation.

3. Can Autonomous Nodes be embedded into mobile apps or third-party platforms?

Yes, Autonomous Nodes can be embedded into mobile apps or third-party platforms once your bot is deployed. Botpress supports multichannel deployment through SDKs and integrations for platforms like WhatsApp, Slack, Messenger, or mobile apps using webviews or APIs.

4. How does the node handle concurrent users or high traffic volume?

Autonomous Nodes in Botpress handle concurrent users by running each session independently in memory, ensuring personalized conversations. For high-traffic use cases, it's important to monitor resource usage and optimize logic and API calls to maintain low latency and high availability.

5. Are there guardrails to prevent an Autonomous Node from sharing sensitive information?

Yes, you can configure strict guardrails to prevent an Autonomous Node from sharing sensitive information by limiting tool access and customizing prompts. Additionally, Botpress uses LLMs with built-in safety mechanisms to help enforce compliance.
