- AI hallucination happens when models confidently produce false or made-up information, caused by poor data quality, overfitting, or ambiguous prompts.

- Hallucinations range from factual errors to fabricated content and can damage trust, cost businesses billions, or spread harmful misinformation.

- Key prevention steps include choosing reliable AI platforms, adding retrieval-augmented generation (RAG), crafting precise prompts, and including human oversight.

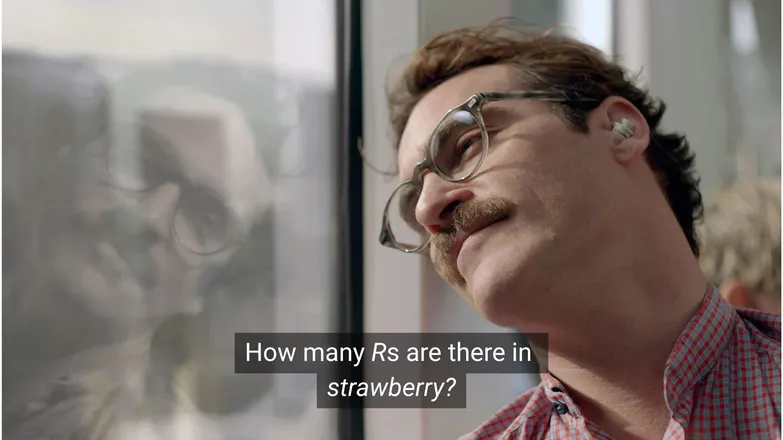

Do you think Joaquin Phoenix would have fallen in love with Scarlett Johansson if he had asked her how many Rs are in strawberry? If you’re on LinkedIn, you know that the answer is 3. (Or, you know, if you can read.)

But to AI chatbots, it isn’t always so simple.

You’ve probably seen people poking fun at the absurdity of AI hallucinations. And to be fair, an AI model of seemingly endless knowledge, human-like reasoning skills and lightning-fast task execution failing at a kindergarten-level math problem is, well, kind of absurd.

But behind the fun and games lies a more serious — and potentially insidious — reality.

In this article, I’m going to talk about AI hallucinations: what they are, what causes them, why they matter, and the measures you can take to prevent them.

What is AI hallucination?

AI hallucination is when an AI model presents information that is inaccurate, misleading, or entirely fabricated. This false information can appear plausible, and in many cases, go undetected.

Because of LLMs’ widespread adoption, hallucinations are most often referred to in the context of generative text models. In reality, they pose a risk for any application of Generative AI.

What causes hallucination in AI?

AI hallucination happens when models learn false patterns.

Patterns, in the context of AI, refer to a model’s ability to use individual training examples to generalize to unseen data. A pattern might be the series of words that forms a likely continuation of a text, or the distribution of image pixels that corresponds to a dog.

In the case of LLM hallucination, the model has deemed a series of words the most probable follow-up to the user’s prompt, even though it’s false.

This could be due to one or more of the following reasons:

Low-Quality Training Data

ChatGPT and similar LLMs are trained on loads of data. This data, plentiful as it may be, is imperfect due to:

- Gaps in certain topics

- Real-world prejudice reflected in the text

- Deliberate misinformation or unmarked satire

- Bias, as in imbalanced or “lopsided” data

Consider a scenario where the model was trained on information about all but one Greek god.

Its ability to draw statistical connections between topics common in Greek mythology (love, ethics, betrayal) could lead it to string together made-up mythology about the missing god that it deems “likely”, given its statistical model.

This is also apparent in image generation, where prompts for a female subject often produce hyper-sexualized images. The bias toward one particular type of depiction conditions the kinds of images that are generated.

The spelling of strawberry has likely come up in the training data in discussions of the double R, a notorious pain point for non-native English speakers. In that context, the number 2 or the word “double” is likely to appear in connection with the word’s spelling.

On the other hand, it’s unlikely that the data would have made mention of it having 3 Rs.

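The output is absurd because the prompt is, too: under what circumstances would someone write out a word and then ask how it’s spelled? Text like that rarely appears in training data, so the model has little to go on besides the “double R” association.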
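To make that reasoning concrete, here’s a toy sketch in Python. It is not how a real LLM works: the hypothetical `most_associated_count` function answers by picking whichever number shows up most often near the word in a tiny, made-up corpus, while `actual_count` simply counts the letters.

```python
import re
from collections import Counter

# Hypothetical mini-corpus: mentions of "strawberry" mostly discuss the double R.
CORPUS = [
    "Remember that strawberry is spelled with a double r.",
    "Strawberry has 2 r's in 'berry', which trips up learners.",
    "Is it strawbery or strawberry? It's 2 r's, a double r.",
    "My favorite strawberry recipe has 3 ingredients.",
]

# Words the toy model treats as numbers.
WORD_TO_NUMBER = {"double": 2, "two": 2, "three": 3}


def most_associated_count(word: str, corpus: list[str]) -> int:
    """Toy 'statistical' answer: the number that co-occurs most often with `word`."""
    votes = Counter()
    for sentence in corpus:
        text = sentence.lower()
        if word not in text:
            continue
        for token in re.findall(r"[a-z]+|\d+", text):
            if token.isdigit():
                votes[int(token)] += 1
            elif token in WORD_TO_NUMBER:
                votes[WORD_TO_NUMBER[token]] += 1
    return votes.most_common(1)[0][0]


def actual_count(word: str, letter: str) -> int:
    """Deterministic answer: just count the letters."""
    return word.lower().count(letter.lower())


print(most_associated_count("strawberry", CORPUS))  # 2
print(actual_count("strawberry", "r"))              # 3
```

The toy “model” answers 2 because that’s what the text around the word suggests, not because it counted anything.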

Model Architecture and Generation Method

The models are built from staggeringly complex neural network architectures. Slight variations impact the way models interact with their training data and input prompts. A model’s capacity to mitigate hallucination is subject to incremental improvement through rigorous research and testing.

On top of that is how generation is implemented. Models generate text word by word (actually, word-piece by word-piece), predicting the most likely word to follow. So:

“The quick brown fox jumps over the lazy ___.”

A model will determine that the most likely next word is “dog”. But other words are possible, and generation based solely on picking the single most likely next word produces uninteresting, predictable results.

That means creative sampling methods have to be employed to keep responses exciting-yet-coherent. In doing so, factuality sometimes slips through the cracks.
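Here’s a minimal sketch of the difference between greedy decoding and temperature sampling, one common sampling method, assuming made-up scores (logits) for a handful of candidate words. Real models score tens of thousands of word-pieces; the `LOGITS` values here are purely illustrative.

```python
import math
import random

# Hypothetical next-word scores (logits) after "The quick brown fox jumps over the lazy ___".
LOGITS = {"dog": 5.0, "cat": 3.0, "sheep": 2.0, "river": 1.0}


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = {w: s / temperature for w, s in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def greedy(logits: dict[str, float]) -> str:
    """Always pick the single most likely word."""
    return max(logits, key=logits.get)


def sample(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw a word at random, weighted by its temperature-scaled probability."""
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


rng = random.Random(42)
print(greedy(LOGITS))                         # dog
print(round(softmax(LOGITS, 0.5)["dog"], 3))  # sharper: "dog" dominates
print(round(softmax(LOGITS, 1.5)["dog"], 3))  # flatter: other words gain ground
print([sample(LOGITS, 1.5, rng) for _ in range(5)])
```

Raising the temperature makes output more varied, but it also gives less likely (and possibly wrong) words a better chance of being picked.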

Overfitting

Overfitting is when a model fits its training data so closely that it fails to generalize to new inputs.

So, if I were a model (as my mom says I should be), then I would be a properly trained one if I were to recognize dogs as:

Furry, droopy-eared, playful, and with a little brown button nose.

But I would be overfit if I only recognized them as:

Having a brown dot under its chin, answering to the name “Frank”, and having totally chewed up my good pair of Nikes.

In the context of an LLM, it usually looks like regurgitating information seen in the training data, instead of backing off where it doesn’t know the answer.

Say you ask a chatbot for a company’s return policy. If it doesn’t know, it should tell you so. But if it’s overfit, it might return a similar company’s policy instead.
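The dog example translates into a toy sketch like this, using made-up features and two hypothetical classifiers. Neither is a real machine-learning model (real overfitting lives in learned weights, not hand-written rules), but `memorizer` behaves like an overfit model by recognizing only exact copies of its training data, while `feature_rule` checks the general traits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Animal:
    furry: bool
    droopy_ears: bool
    playful: bool
    name: str  # incidental detail: says nothing about whether it's a dog


# Training data: the one dog this "model" has ever seen.
TRAINING_DOGS = {Animal(furry=True, droopy_ears=True, playful=True, name="Frank")}


def memorizer(animal: Animal) -> bool:
    """Overfit: only an exact copy of a training example counts as a dog."""
    return animal in TRAINING_DOGS


def feature_rule(animal: Animal) -> bool:
    """Generalizes: looks only at traits that dogs share."""
    return animal.furry and animal.droopy_ears and animal.playful


new_dog = Animal(furry=True, droopy_ears=True, playful=True, name="Biscuit")
print(memorizer(new_dog))     # False: it has never seen a dog named Biscuit
print(feature_rule(new_dog))  # True
```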

Poor Prompting

Companies are issuing certificates in prompt engineering with the knowledge that AI is only as good as its inputs.

A well-formed prompt is precisely articulated, avoids niche terminology, and provides all the necessary context.

This matters because hallucinations tend to happen when the model has to choose among many low-probability outputs.

Say you ask, “What’s the plot of shark girl?” A human thinks, “Huh, shark girl.” In the statistical world, the possibilities are:

- The Adventures of Sharkboy and Lavagirl – a pretty popular kids’ movie from 2005 with a similar name.

- A 2024 horror/thriller called Shark Girl – less popular but more recent and accurate.

- A children’s book with the same name from earlier this year – which the model may or may not have indexed.

None of these is the obvious choice, which results in a “flatter” probability distribution with less commitment to any one topic or narrative. A more effective prompt would provide context, that is, specify which of these the user is referring to.

This soup of ambiguity and tangential relevance may produce a response that’s just that: a made-up generic plot to a shark-related story.

Lowering your chance of hallucinations is about lowering uncertainty.
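One way to put a number on “flatter” is Shannon entropy. The sketch below assumes made-up probabilities for the three shark-themed interpretations; the only point is that the ambiguous prompt spreads probability out and scores higher (less certain) than the specific one.

```python
import math


def entropy_bits(probs: list[float]) -> float:
    """Shannon entropy in bits: higher means the distribution is less committed."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical probabilities over three readings: the 2005 kids' movie,
# the 2024 horror film, and the children's book.
ambiguous = [0.40, 0.35, 0.25]  # "What's the plot of shark girl?"
specific = [0.02, 0.95, 0.03]   # "What's the plot of the 2024 horror film Shark Girl?"

print(entropy_bits(ambiguous) > entropy_bits(specific))  # True
```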

Types of AI Hallucinations

So far I’ve talked about hallucinations in broad strokes. In reality, they touch nearly every aspect of AI. For the sake of clarity, though, it’s best to consider them in categories.

Factual Errors

This is where the strawberry example fits in. Factual errors are mistakes in the details of otherwise factual statements, such as the year a certain event took place, the capital of a country, or the figures in a statistic.

Errors in minute details of an otherwise good response can be particularly insidious, especially details that humans don’t often remember, like exact numbers.

Fabricated Content

In 2023, Google’s Bard falsely claimed that the James Webb Space Telescope took the first pictures of exoplanets. This wasn’t a matter of technical inaccuracy; it was just plain false.

These can be bold claims like the one above, but more often they appear as URLs that go nowhere or as made-up code libraries and functions.

It’s worth noting that the line between factual errors and fabricated content isn’t always clear.

Say we’re discussing a researcher. If we cite a paper of theirs but get the year wrong, it’s a factual error. If we get the name wrong, then what? What about both the name and the year?

Misinformation

This can fall under either of the two previous categories, but refers to false information whose source is easier to trace.

Google’s AI Overviews famously recommending glue on pizza and eating rocks is a great example of this. The source material was obviously satirical and generally harmless (a joke Reddit comment and an article from The Onion), but the system didn’t account for that.

Risks of AI Hallucinations

1. Loss of Trust

We appreciate the freedom of offloading our tasks to AI, but not at the expense of our trust.

Cursor AI’s recent mishap, in which its customer support bot invented a restrictive policy, led many users to cancel their subscriptions and question the product’s reliability.

2. Cost

AI has taken the front seat in many businesses, and while that’s a good thing, a slip-up can be costly.

Google’s James Webb hallucination helped wipe roughly $100 billion off Alphabet’s market value in a single day. And that’s before the cost of re-training the models.

3. Harmful Misinformation

We laugh at the absurdity of glue pizza, but how about misleading medical doses?

I’ll be the first to trade in reading warning-ridden fine print for a quick answer from AI. But what if it’s wrong? It almost certainly won’t account for all possible medical conditions.

4. Security and Malware

As mentioned, AI often makes up the names of code libraries. When you try to install a library that doesn’t exist, the installation simply fails.

Now imagine a hacker embeds malware into code and uploads it under the name of a commonly-hallucinated library. You install the library, and 💨poof💨: you’re hacked.

This exists, and it’s called slopsquatting.

Gross name aside, it never hurts to be critical about what you’re installing and to double-check any exotic-sounding library names.
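Checking that a package name exists on a registry isn’t enough on its own, since slopsquatting works precisely by registering hallucinated names. One conservative option, sketched here under the assumption of a Python/pip project, is to compare AI-suggested dependencies against an allowlist your team has already vetted. The `VETTED_PACKAGES` set and the package names are hypothetical; `normalize` follows PEP 503’s name-normalization rule.

```python
import re

# Hypothetical allowlist: packages your team has already reviewed.
VETTED_PACKAGES = {"requests", "numpy", "pandas"}


def normalize(name: str) -> str:
    """Normalize a Python package name as PEP 503 does (case and -_. insensitive)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(suggested: list[str]) -> list[str]:
    """Return the suggested package names that aren't on the allowlist."""
    vetted = {normalize(p) for p in VETTED_PACKAGES}
    return [p for p in suggested if normalize(p) not in vetted]


# Names an AI assistant might suggest; "fastjson-utils-pro" stands in for a hallucinated one.
print(unvetted(["requests", "NumPy", "fastjson-utils-pro"]))  # ['fastjson-utils-pro']
```

Anything the function returns deserves a manual look before it goes anywhere near `pip install`.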

Steps to Prevent AI Hallucinations

If you’re not training the models, there’s little you can do on the data and architecture end.

The good news is that there are still precautions you can take, and they can make all the difference in shipping hallucination-free AI.

Choose a Model and Platform You Can Trust

You’re not on your own. AI companies have every interest in maintaining trust, and that means keeping hallucinations to a minimum.

Depending on what you’re doing with AI, you almost always have at least a few options, and a good AI platform makes this accessible. These platforms should be transparent about how they mitigate hallucinations.

Use RAG (Retrieval-Augmented Generation)

Don’t make the model rely on its own knowledge. RAG retrieves relevant information from sources you control and adds it to the prompt, so the model knows what information is available and where to find it.

It’s best to run AI on a platform with simple instructions on how to implement effective RAG.
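The core RAG loop can be sketched in a few lines: retrieve the most relevant document, then place it in the prompt ahead of the question. This is a simplified illustration, not any platform’s implementation. Production systems usually rank documents with embeddings and vector search; the word-overlap scoring, the `FAQ` texts, and the prompt wording here are all assumptions, and the step that sends the prompt to a model is left out.

```python
import re

# Hypothetical FAQ documents the agent is allowed to draw on.
FAQ = [
    "Returns: you can return an item within 30 days of purchase.",
    "Shipping: standard shipping takes 3 to 5 business days.",
    "Warranty: electronics carry a one-year limited warranty.",
]

STOPWORDS = {"a", "an", "the", "to", "of", "do", "i", "how", "many", "what", "is", "your"}


def tokens(text: str) -> set[str]:
    """Lowercase words and numbers, minus common filler words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for vector search)."""
    q = tokens(question)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokens(d)]


def build_prompt(question: str, docs: list[str]) -> str:
    """Put the retrieved text in front of the question so the model answers from it."""
    context = "\n".join(retrieve(question, docs)) or "(no matching documents)"
    return (
        "Answer using only the context below. If the answer isn't there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("How many days do I have to return an item?", FAQ))
```

When nothing matches, the prompt says so explicitly, which gives the model a clear cue to decline rather than improvise.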

Add Thorough Instructions

If you’ve heard it once you’ve heard it a thousand times: garbage in, garbage out.

“Answer the user’s question” won’t guarantee success. A prompt like the following, however, will keep your agent in check:

# Instructions

Please refer exclusively to the FAQ document. If the answer does not appear there:

* Politely inform the user that the information is unavailable.

* Offer to escalate the conversation to a human agent.

Clear prompting with firm guardrails is your best defense against a rogue agent.
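If you’re calling a model from code, the same instructions can be assembled programmatically. This sketch assumes the role/content message list that many chat-completion APIs use (check your provider’s documentation for the exact format); the FAQ text and question are hypothetical. Keeping the guardrails in the system turn, separate from user text, makes them harder for a user message to override.

```python
# The instruction prompt from the article, used as the system message.
SYSTEM_INSTRUCTIONS = """# Instructions

Please refer exclusively to the FAQ document. If the answer does not appear there:

* Politely inform the user that the information is unavailable.
* Offer to escalate the conversation to a human agent."""


def build_messages(faq_text: str, user_question: str) -> list[dict[str, str]]:
    """Assemble a chat request: guardrails and FAQ in the system turn, question in the user turn."""
    system = f"{SYSTEM_INSTRUCTIONS}\n\n# FAQ document\n\n{faq_text}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_question},
    ]


# Hypothetical FAQ text and user question.
messages = build_messages("Returns are accepted within 30 days.", "Do you ship to Mars?")
print([m["role"] for m in messages])  # ['system', 'user']
```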

Human Verification

On the topic of escalation: it pays to have a person ready to inspect, evaluate, and squash AI’s shortcomings.

The ability to escalate conversations, or to verify them retroactively, lets you figure out what works and what’s at risk of hallucination. Human-in-the-loop (human oversight of AI-driven workflows) is a must here.
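A human-in-the-loop setup can start as a simple routing rule. The sketch below is hypothetical throughout: it assumes each answer comes with a confidence score (for example, a retrieval score), uses an arbitrary 0.7 threshold, and stands in a plain list for whatever review queue or ticketing system you actually use. Fallback answers and low-confidence answers go to a person; everything else is sent.

```python
from dataclasses import dataclass, field

# Mirrors the fallback behavior the instructions ask for.
FALLBACK = "Sorry, that information isn't available. Want me to connect you with a human agent?"


@dataclass
class ReviewQueue:
    """Hypothetical queue of conversations waiting for a person to check."""
    items: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, question: str, answer: str, reason: str) -> None:
        self.items.append((question, answer, reason))


def route(question: str, answer: str, confidence: float,
          queue: ReviewQueue, threshold: float = 0.7) -> str:
    """Send confident answers; escalate fallbacks and low-confidence answers for review."""
    if answer == FALLBACK:
        queue.add(question, answer, "bot could not answer")
        return "escalated"
    if confidence < threshold:
        queue.add(question, answer, f"low confidence ({confidence:.2f})")
        return "escalated"
    return "sent"


queue = ReviewQueue()
print(route("What's your return window?", "30 days.", 0.92, queue))  # sent
print(route("Do you ship to Mars?", FALLBACK, 0.0, queue))           # escalated
print(route("Is the warranty transferable?", "Yes.", 0.41, queue))   # escalated
print(len(queue.items))                                              # 2
```

Reviewing what lands in the queue over time is what tells you which topics are at risk of hallucination.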

Use Hallucination-Free AI Today

Uncertainty around AI’s reliability might be keeping businesses from digital transformation.

Botpress’ RAG capabilities, human-in-the-loop integration, and thorough security systems make AI secure and reliable. Your agent works for you, not the other way around.

Start building today. It’s free.

FAQs

1. How can I tell if an AI response is a hallucination without having prior knowledge of the topic?

To tell if an AI response is a hallucination without prior knowledge, look for signs like extremely specific claims, overconfident language, or names, statistics, or citations that can’t be independently verified. When unsure, prompt the AI to cite its sources or cross-check with reliable external references.
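Part of that checklist can be automated. This is a rough heuristic sketch, not a hallucination detector: it only pulls out the easy-to-fabricate specifics (URLs, years, percentages) so you know what to cross-check. The patterns are simplified assumptions and the example response is made up.

```python
import re

# Details that are easy to fabricate and worth checking against a reliable source.
PATTERNS = {
    "urls": r"https?://[^\s)]+",
    "years": r"\b(?:19|20)\d{2}\b",
    "percentages": r"\b\d+(?:\.\d+)?%",
}


def claims_to_verify(response: str) -> dict[str, list[str]]:
    """Pull out URLs, years, and percentages from an AI response for manual checking."""
    return {kind: re.findall(pattern, response) for kind, pattern in PATTERNS.items()}


# Hypothetical AI response.
answer = "A 2021 study (https://example.com/study) found that 73% of users trust chatbots."
print(claims_to_verify(answer))
# {'urls': ['https://example.com/study'], 'years': ['2021'], 'percentages': ['73%']}
```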

2. How common are AI hallucinations in large language models (LLMs)?

AI hallucinations are common in large language models because they generate text based on probabilities, not factual verification. Even advanced models like GPT-4o and Claude 3.5 can produce inaccurate outputs, especially when asked about niche topics or in low-context situations.

3. Can hallucinations happen in non-text-based AI models like image generators?

Yes, hallucinations also occur in image-generation models, often as unrealistic or nonsensical elements in the output – such as extra limbs or incorrect cultural representations – caused by biases or gaps in the training data.

4. Are hallucinations more common in certain languages or cultures due to training data biases?

Hallucinations tend to be more common in underrepresented languages and non-Western cultural contexts, because most LLMs are trained primarily on English and Western-centric data, which leads to less culturally nuanced responses.

5. What industries are most at risk from the consequences of AI hallucinations?

Industries like healthcare, finance, law, and customer service are most at risk from AI hallucinations, as inaccurate outputs in these fields can lead to compliance violations, financial loss, legal missteps, or damage to user trust and brand reputation.
